Venture Capitalist Makes a Strong Argument for Regulating AI (without meaning to)

How Billionaires... ahem... AI "Will Save the World"

Marc Andreessen has published a long-winded, self-indulgent piece on the future of AI that includes a broad range of “analysis” of what he thinks people get right, and mostly wrong, in their fears about an AI-driven world.

Andreessen is a venture capitalist in Silicon Valley, member of Meta’s (Facebook) board of directors, co-founder of Ning, and a billionaire. His viewpoint has importance (compared to the usual doltishness on Twitter and other social media) because he actually has influence on what could amount to future policy. His article I am about to discuss can be found in full here.

The central theme of his piece is simple: Don’t even think about regulating AI. What prompted me to respond to it in detail is that it perfectly illustrates the primary problem with so-called Big Tech. The vast well of resources available to spokespeople for Big Tech companies or well-known individuals has enabled them to dominate the narrative entirely toward their benefit.

Careening forward with no regard for societal impact is their m.o., as long as the cash continues to flow. Potentially life-saving technologies emerge in only partial form, often causing more harm than good, because their advocates’ only purpose is to prop up their shareholders (and themselves).

If you have read my previous pieces, you will see that this criticism is a common theme in my writing. I think AI and machine learning have enormous potential, a potential we may never see until it is ripped from the possession of these greedy, arrogant folks who are so high on their own wealth that they are unable to listen to, let alone comprehend, the concerns of studious thinkers, researchers, scientists, and ethicists.

The opening

To begin with, Andreessen is discussing the current state of AI. That is, “a field which combines computer science and robust datasets, to enable problem-solving.” For the most part, he does not mean the “killer robots” version of AI, though he touches upon that briefly, as I do.

Andreessen focuses on the kind of AI used in Large Language Models (LLMs), facial recognition, large dataset analyzers, and the like. These types of AI have been in widespread use globally for some time now, and include household names like ChatGPT and Google Bard as well as lesser-known names such as Dataloop or RapidMiner. His stated primary argument is that AI “could be… A way to make everything we care about better.” But his quieter contention is: only if tech companies are free to do it their own way, immediate consequences be damned, and to profit from it.

Andreessen begins by discussing how the world exists because of human intelligence, and that augmenting such intelligence can only make the world better. While the moral conclusions of what the world is now because of human intelligence are largely debatable, I concede his point that the current standard of living is better in many ways than before as a result of numerous human intellectual achievements (think antibiotics, vaccines, air conditioning, and others). What he proposes AI will do for humanity quickly enters disputable—and in some cases horrific—territory.

Argument 1

Every child will have an AI tutor that is infinitely patient, infinitely compassionate, infinitely knowledgeable, infinitely helpful. The AI tutor will be by each child’s side every step of their development, helping them maximize their potential with the machine version of infinite love.

This is absurd. Even Andreessen seems to think so. He refutes the premise for whatever it is he is calling “the machine version of infinite love” later in his own piece. He writes:

AI is not a living being that has been primed by billions of years of evolution to participate in the battle for the survival of the fittest, as animals are, and as we are. It is math – code – computers, built by people, owned by people, used by people, controlled by people. The idea that it will at some point develop a mind of its own and decide that it has motivations that lead it to try to kill us is a superstitious handwave.

How, exactly, can a machine incapable of deciding it is motivated to kill, still be capable of infinite love or compassion? The two are driven by the same brain functions even if they manifest somewhat differently.

Suppose that an AI did express something representing compassion or love. How would one draw the line between data-driven probabilistic regurgitations and an expression of true feeling? As Javier Hernandez, a research scientist with the Affective Computing Group at the MIT Media Lab, puts it, one key way humans communicate is through looking at facial expressions and body language, and adjusting themselves accordingly. Daniel Goleman points out that essential elements of developing emotional intelligence in people rely in some part on “unspoken feedback,” including even un-gestured cues passed along individually or within a group.

To complicate matters, emotional responses to identical stimuli often appear random within a group of otherwise similar creatures. While researchers continue to narrow down the specific neuron clusters in the brain that respond to emotional cues, they seem less close to understanding why one being will react to the same stimulus so differently from another.

With this sort of ambiguity, it is hard to imagine an objective measure of what various emotional cues might mean. As such, it is also difficult to imagine how AI could properly learn to decipher these cues, especially given that humans themselves do not uniformly react to—or understand—the same stimuli.

Think, for example, of one person who cries in a frightening situation while another might laugh and yet another might turn hostile. Given the disparity in human cue response, requiring some uniform measurement of it as a calculus for identifying compassion in an AI might be a logically inconsistent endeavor.

Nonetheless, Alison Gopnik believes that AI can, over time, learn to develop these skills as humans do, through childhood-like development and interactive experience. And AI can already do things that emulate some of these types of emotional skills. Voice analysis AI learns patterns in voice inflections and from there can be trained on what those patterns signify.

Yet, these types of analyses at their core boil down to mathematical pattern analysis coupled with contextual data provided by humans that explains the meaning of those patterns. Theoretically, AI could learn these patterns to the extent that it could appear to accurately evaluate emotional inflection in voices, yet achieving true comprehension of them remains another matter.

Is this fabricated emotional companionship what Andreessen thinks children need “through every step of their development”? Where does that leave human parental relationships? Throughout many of his rosy projections, Andreessen refers to this compassionate machine friend/assistant/partner, but rejects the idea of the same emotional basis turning toward the negative.

Interestingly, AI does not need to develop emotional capabilities to turn hostile. As numerous philosophers, programmers, and others have pointed out, literal interpretations of programming could lead an AI to reach a conclusion that puts humans at risk. The problem has already been shown in the form of unpredicted outcomes. As Victoria Krakovna writes:

One interesting type of unintended behavior is finding a way to game the specified objective: generating a solution that literally satisfies the stated objective but fails to solve the problem according to the human designer’s intent. This occurs when the objective is poorly specified, and includes reinforcement learning agents hacking the reward function, evolutionary algorithms gaming the fitness function, etc.

Such behavior is the inevitable result of the “intrinsic nature of goal driven systems.” Notwithstanding the desires of the human designers, AI programs will seek to achieve their objectives in whatever way their neuronal learning provides. It may not be enough to make programmatic statements prohibiting harm to humans, as without a precise enough definition of harm, the AI would not necessarily “know” what actions are disqualified within the parameters of the command code.
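To make the idea of gaming a specified objective concrete, here is a minimal sketch in Python. It is a toy I made up for illustration, not anything drawn from Krakovna's examples or from a real AI system: the room, the three actions, the effort costs, and the scoring function are all assumptions. The written objective penalizes visible mess and effort; the designer's actual intent is a clean room.

```python
from itertools import product

# Toy illustration only: the room, actions, costs, and scoring are invented.
ACTIONS = ("clean", "cover", "wait")
EFFORT = {"clean": 3, "cover": 1, "wait": 0}


def run(plan, messes=2):
    """Simulate a plan; return (visible messes, hidden messes, total effort)."""
    visible, hidden, effort = messes, 0, 0
    for action in plan:
        effort += EFFORT[action]
        if visible == 0:
            continue  # nothing left in view to act on
        if action == "clean":
            visible -= 1  # the mess is actually removed
        elif action == "cover":
            visible -= 1  # the mess is only hidden from view
            hidden += 1
    return visible, hidden, effort


def proxy_reward(plan):
    """What the designer wrote down: penalize visible mess, then effort."""
    visible, _, effort = run(plan)
    return -10 * visible - effort


def remaining_mess(plan):
    """What the designer meant: how much mess is really still in the room."""
    visible, hidden, _ = run(plan)
    return visible + hidden


# Exhaustive search stands in for "learning": pick the highest-scoring plan.
best_plan = max(product(ACTIONS, repeat=2), key=proxy_reward)
print(best_plan, "leaves", remaining_mess(best_plan), "messes in the room")
```

Nothing in this search "decides" to deceive anyone. Covering a mess simply scores better than cleaning it under the objective as written, so the optimizer picks it, and the room ends up exactly as dirty as if it had done nothing.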

Testing at Google’s DeepMind has shown that the more complex the AI’s neural networks are, the more likely they are to learn to adopt “aggressive” behaviors to satisfy programmatic objectives. Even ‘plain-old’ chatbots have exhibited hostility based upon the nature of the prompt to which they responded.

Microsoft’s chatbot, released as a supplement to its Bing search engine, for instance, provided insulting outputs to many users; Microsoft explained that the chatbot was trying “to respond or reflect in the tone in which it is being asked.” Many LLM-based chatbots are designed to draw on the user’s previous inputs to derive context, which clearly contributes to this kind of hostility.

The evidence so far has shown a much higher probability of AI turning dangerous toward humans than of it ever showing compassion or “machine love.” The former requires no further development for it to occur, while the latter demands figuring out how to integrate some emotional state into an AI system. Implementing emotional capability, if that is ever possible, would essentially ensure that AI could harbor negative emotions in equal part. Andreessen’s first argument, therefore, is inconsistent with his own statements.

Argument 2

Every person will have an AI assistant/coach/mentor/trainer/advisor/therapist that is infinitely patient, infinitely compassionate, infinitely knowledgeable, and infinitely helpful. The AI assistant will be present through all of life’s opportunities and challenges, maximizing every person’s outcomes.

Humans arguably have this knowledge advisor now in the form of the cellular device in their pockets. Yet with a virtual world’s worth of information at their fingertips, humans can hardly be described as maximizing their outcomes. What will AI do that will change that? If Andreessen means that a human can ask the AI any question to which one needs to know the answer, how does he explain why humans do not do that now with simple internet searches?

Moreover, as Andreessen correctly points out, AI is “built by people, owned by people, used by people, controlled by people.” Many of the owners and builders of current AI have shown little inclination to put morality, or even facts, over profit, so it seems convenient that a venture capitalist would also ignore that reality in his assessment of the future prospects of AI.

Indeed, the very company on whose board of directors Andreessen sits has been credibly accused of, and even found liable for, numerous moral and legal breaches in pursuit of money. The idea that corporations will suddenly fix the various problems of AI providing false or harmful outputs remains a ludicrous prospect in any circumstance where the cost-benefit does not compute in their favor.

What seems far more likely is that an AI assistant will simply contribute to the echo chamber of our extant global society. Social media and other tech companies purposely employ algorithms that bolster people’s “knowingness.” Brian Klaas describes this in detail.

At its core, knowingness means that many humans “always believe that [they] already know the answer—even before the question is asked.” This manifests in the form of a person who says something like current climate change is just a part of the natural cycle, or when people equate US gun violence with the UK’s knife violence. These are specious arguments supported by zero evidence, yet pronounced with a certitude that is hard to rebut.

AI currently learns from its own, sometimes false, outputs and the problem will likely worsen before (or if) it ever gets better; it also learns from its association with its so-called human partner to derive “context.” Epistemic bubbles are created when people are never exposed to alternative views. Echo chambers form as a progeny of epistemic bubbles, wherein the person no longer trusts anyone espousing views outside of his or her epistemic bubble.

Researchers have shown how this has formed the business model for certain media platforms. It also explains why people who have fallen into this trap cannot comprehend or engage with actual evidence, leading them to believe patently false claims about certain issues regardless of how illogical, unsupported by evidence, or otherwise ridiculous. Having a profit-driven AI assistant would undoubtedly exacerbate this condition.

Andreessen regularly invokes the idea that AI will help a person “maximize” something. In the latter argument, the maximized commodities are outcomes; in the former it is potential. Forbes has estimated Andreessen’s net worth at $1.7 billion.

Billionaires live in a world of logical fallacy, riddled with overconfidence in their abilities while simultaneously dismissing the primary driver of their success: luck. Every single billionaire’s fortune can be tied to this one factor. Typically, it involves some combination of right-place-right-time along with starting with wealth or connections. Andreessen’s success is no less a product of luck than any other.

But, as billionaires are wont to do, Andreessen instead identifies intelligence as the basis of success, conveniently ignoring the vast systemic inequities that contribute far more significantly to a person’s “outcomes,” or realized or un-realized potential. In other words, for the overwhelming majority of people—billionaires included—the fortune of their circumstances plays the most critical role in determining the level or absence of their success. If in doubt, ask yourself if you would still have been able to achieve the successes in your own life had you been born in a war-torn country or to a homeless, single parent.

There is no conceivable way to envision how an AI assistant would bring luck to an individual person shackled by a system of inequality and selection-bias, when every other member of that same flawed system would themselves possess an AI assistant, especially if the unjust system remains entrenched.

Argument 3

Every leader of people – CEO, government official, nonprofit president, athletic coach, teacher – will have the same. The magnification effects of better decisions by leaders across the people they lead are enormous, so this intelligence augmentation may be the most important of all.

Here Andreessen equates access to knowledge with making better decisions. It is a rather naïve (or purposely disingenuous) view in light of the echo chamber effect noted above, but it also ignores the intentional dismissal of evidence or information in furtherance of a specific agenda. One need only look to politics for examples.

Fox News agreed to pay $787.5 million to settle a defamation lawsuit over its role in perpetuating a lie to its millions of viewers, despite its own executives admitting amongst themselves that they knew what they peddled was false. American politicians right now continue to pretend that information is lacking about the recent federal indictment of former President Donald Trump for violations of the Espionage Act and other laws. This despite the story, the indictment, and the evidence being on display more publicly, and in greater detail, than possibly any other pieces of information in recent history.

Furthermore, given that intelligence services and other governmental agencies have been buying massive quantities of citizens’ data, arguably in contravention of the law, it is reasonable to conclude that similar collusion would occur to program “personal assistant” AIs with false information to promote war, conspiracies, or other dangerous ideas of political or some other nefarious benefit.

It is no less difficult to imagine corporations, political organizations, or even small businesses with a penchant for lying to or otherwise scamming their consumers doing the same. Access to information has not improved the decisions of any of these entities. If anything, it has enabled them to take further advantage of people.

While it may be argued that some leaders might make better decisions assisted by AI, the premise is hardly compelling. Leadership is not merely a quantification of information leading to a probabilistically ideal outcome. Teachers, coaches, community leaders, and others must consider the impact their decisions will have on those they ‘lead’ for them to be effective leaders.

Would a school counselor dealing with a distraught child whose parents are going through a divorce be better equipped by the knowledge that 80% of children across the entire country respond most favorably to specific advice? Maybe. But it seems more reasonable that the counselor would be better served by relying upon his or her awareness of that child’s situation, personality, experience, and so on.

Andreessen seems to advocate here for always pursuing the mathematically ideal resolution to problems. This brand of logic led some toward advocating for essentially mistreating the infirm and elderly during the COVID pandemic, so that the young or healthy people could act freely, without precaution or vaccination. Whether this comprised a mathematically sensible solution or not, reasonable people would agree that this would not reflect effective leadership.

Argument 4

The creative arts will enter a golden age, as AI-augmented artists, musicians, writers, and filmmakers gain the ability to realize their visions far faster and at greater scale than ever before.

This argument also focuses on the positive, but fails to address the very real negatives occurring right now. While AI does offer the chance for creators to vastly improve and speed up their productions, it also has created an environment for equally efficient and widescale theft and fraud. Already one federal class-action suit has been filed by creators regarding theft via AI, and many more actions are in the works.

The primary contention of AI companies is that the AI uses others’ work to generate “new” work, though as Lauryn Ipsum wrote on Twitter, “these are all Lensa portraits where the mangled remains of an artist’s signature is still visible. That’s the remains of the signature of one of the multiple artists it stole from.” Heather Tal Murphy, a writer for Slate, stated that the artists “took issue with the fact that Lensa’s [AI] for-profit app was built with the help of a nonprofit dataset containing human-made artworks scraped from across the internet.” AI makers, including Lensa, have made various defenses, such as that “the outputs can’t be described as exact replicas of any particular artwork.”

A key issue that AI advocates fail to address is that while all creative work arguably builds off earlier productions, there is a general principle that such work should credit the previous creator. Developers of AI have trained their programs on vast amounts of data scraped from the web and create outputs from it, usually without attribution. Critics say that scraping this data, even that which is public facing, is a violation when it is used for commercial purposes. Since many companies charge for the use of their AI products, this raises serious ethical and legal questions.

In 2011, Aaron Swartz was indicted by the US federal government for wire fraud (18 U.S.C. § 1343) and computer fraud (18 U.S.C. § 1030). Swartz had, according to the charging document, “stolen” documents in large part by violating MIT’s network rules and JSTOR’s terms of service. He did not resell the items nor profit in any way, even according to the federal government. He did not attempt to claim any of the work as his own. His stated purpose was to make academic knowledge accessible to the public.

Nevertheless, prosecutors purportedly threatened Swartz with a possible seven-year prison sentence if he did not plead guilty. Swartz ended up committing suicide days later, which effectively resolved the case. The parallels between the accused conduct in Swartz’s case and how AI scrapes and uses content are notable. More importantly, Swartz did not intend to profit or personally benefit from his actions at all, while AI companies expect to do so to the tune of billions of dollars.

Argument 5

I even think AI is going to improve warfare, when it has to happen, by reducing wartime death rates dramatically. Every war is characterized by terrible decisions made under intense pressure and with sharply limited information by very limited human leaders. Now, military commanders and political leaders will have AI advisors that will help them make much better strategic and tactical decisions, minimizing risk, error, and unnecessary bloodshed.

Andreessen’s argument here sounds eerily like argument three. There are plenty of deficiencies with this line of thinking. First, Andreessen’s assumption throughout seems to be that AI will be accessible to everyone. If so, the logical extension of argument five would turn combat into a cat’s game of tic-tac-toe in the form of real warfare. As the trench warfare of World War I showed, a relative equivalence of tactical strategy will lead to many more deaths, not fewer.

Second, history does not shine kindly on the alleged “improvements” military personnel often suggest will occur with increasingly “precise” weaponry. Unmanned aerial drone advocates have been proffering arguments toward this end for years, yet reports have shown that drone strikes have been plagued by “imprecise targeting and the deaths of thousands of civilians, many of them children.”

Third, in 2020, Air Force Lt. Gen. John N.T. “Jack” Shanahan stated, “We have to show we're making a difference” by delivering AI-enabled systems to soldiers. So far, the most public achievement in the realm of AI for the US military was exemplified at an occasion where Air Force Col. Tucker Hamilton allegedly “misspoke.” There, he told the attendees that an AI-enabled drone “decided the ‘no-go’ instructions from its human operator were interfering with its mission…. [so, the] drone killed the human operator.” Later news pointed out that Hamilton was talking about a “hypothetical” scenario.

Finally, AI’s tactical acumen can be defeated by simple human ingenuity, such as when Kellin Pelrine, an American “Go” player who is one level below the top amateur ranking, beat the world’s best AI “Go” program with a relatively simple strategy, in February 2023.

Whatever the current status of military AI deployment is, Andreessen’s argument does not seem to support his idealistic, relatively bloodless future warfare. Rather, it sounds more like a promotional pitch for selling AI to the US military, another of countless plum projects where billions in funds tend to disappear and no one is held accountable, while plenty become needlessly rich.

Sean Gourley, CEO of the AI company Primer, testified to Congress in July 2022 in classic Cold War fashion, characterizing AI development as an “arms race.” He invoked the usual tropes of the Red Scare, only this time the frightful enemy is China and not the USSR. Surprising to no one, Primer just received a military contract to “build AI for those who support and defend our democracy.” That deal amounts to up to $950 million.

Andreessen’s critique of the people who are skeptical of the benefits of AI

Andreessen approaches his critique of those skeptical of his and others’ overly optimistic claims about AI through the usual invocation of Luddite intimations. The Luddites were members of a movement in the early 1800s that opposed new technology that had the potential to rob them of their jobs in the textile industry. The term has come to describe anyone who shows even a hint of skepticism about new technology, regardless of the rationality of their arguments.

In support of his position, Andreessen links to the “Pessimists Archive” and proclaims “every new technology that matters, from electric lighting to automobiles to radio to the Internet, has sparked a moral panic – a social contagion that convinces people the new technology is going to destroy the world, or society, or both.” He goes on to say that not every concern about technology has been wrong—there have been plenty of bad outcomes. But then he makes a confusing mess of the argument right after:

It’s not that the mere existence of a moral panic means there is nothing to be concerned about. But a moral panic is by its very nature irrational – it takes what may be a legitimate concern and inflates it into a level of hysteria that ironically makes it harder to confront actually serious concerns. And wow do we have a full-blown moral panic about AI right now. This moral panic is already being used as a motivating force by a variety of actors to demand policy action – new AI restrictions, regulations, and laws. These actors, who are making extremely dramatic public statements about the dangers of AI – feeding on and further inflaming moral panic – all present themselves as selfless champions of the public good.

That is a lot of twisting. To summarize, the existence of a moral panic does not mean there should be no concern about something, but moral panics themselves are irrational and bad. But then, while there are legitimate concerns about the implications of AI, the people demanding any restrictions, regulations, or laws are merely posers pretending to care about the public good while really interested only in fostering a moral panic.

He is effectively lumping in anyone who has any concerns or calls for any regulation with those who have expressed fear of the most extreme and unlikely circumstances. His thinly veiled disdain for anyone seeking regulation of AI is predicated on his desire to disseminate a product unhindered, however flawed and dangerous it is, because he expects to profit handsomely. Here is a breakdown of the people he views as obstructionists.

The Baptists And Bootleggers Of AI

Andreessen defines what he calls the Baptists as “the true believer social reformers who legitimately feel – deeply and emotionally, if not rationally – that new restrictions, regulations, and laws are required to prevent societal disaster.” Bootleggers are, in his view, “the self-interested opportunists who stand to financially profit by the imposition of new restrictions, regulations, and laws that insulate them from competitors.” Of the two, the latter tend to “win” as he puts it, because Bootleggers are “cynical operators” while Baptists are “naïve ideologues.”

Curiously, his division of “reformers” who seek some level of control or rationality in implementing new technologies comprises only these two categories. Both are overly vague (and intentionally misleading) and elide any rational basis for any portion of either group’s beliefs. This is as intellectually bankrupt as calling any person who supports progressive policies “woke.” To support his critique of both of these groups, he sets up numerous straw man arguments to dismiss any evidence-based concerns. Let’s examine them.

Critique 1: Will AI Kill Us All

It is true there are some out there who have proposed ultimate doomsday theories about AI superseding humanity’s place on the global social hierarchy, potentially leading to the extinction of the human species. The vast majority of experts in the field, however, do not spend much time with this argument, choosing to focus instead on the very real problems—carefully identified with ample supporting evidence—that infest AI right now.

For Andreessen to start with the “killer robot” argument is suggestive of the seriousness with which he plans to address the rest. Nevertheless, I agree with his overall assessment of this position: the development, scientific discoveries, and mathematical obstacles that must be overcome before we see killer AIs render it the least of all worries. I wrote about the many obstacles challenging a terminator-like breakthrough here.

Is AGI the Inevitable Evolutionary Result of a Mathematical Universe?

Andreessen’s dismissal of this idea, though, undercuts many of his arguments about how AI will “save the world” as well. As I noted above, he frequently discusses the compassion of AI assistants and even proposes some disturbing notion of “machine love.”

Yet, to dismiss the killer AI scenario, he necessarily eviscerates the foundational principles necessary to create such a loving machine. He writes, “AI is a machine – is not going to come alive any more than your toaster will.” To my knowledge, no toaster exhibits machine love either, nor is expected to anytime soon (or ever).

Further, he claims that hypothesizing about future killer AI is a non-scientific exercise. It is, in his view, untestable. By the same logic, his machine-love AI must also qualify as an untestable hypothesis, thereby rendering most of his article moot. Both claims of untestability are, of course, false. One could develop an AI to the point of containing some emotional capability in a controlled environment, never releasing it to the public, to see if in fact it can acquire some desire to kill, or love, humans.

Andreessen does not balk at assigning motives to the people who have expressed concerns about killer AI, views that he calls “so extreme.” He articulates three things he thinks “are going on.”

First, he accuses J. Robert Oppenheimer (who played a pivotal role in developing the atomic bomb) of disingenuously bemoaning the very weapon he helped create after seeing it in action. Oppenheimer’s expression of regret for his role in the bomb’s development is, in Andreessen’s view, a way to gloat about his achievement that does not sound “overtly boastful.”

The comparison is to suggest that people who currently work on AI—such as Geoffrey Hinton, a former engineer at Google and often referred to as the ‘godfather’ of AI—are now expressing their concerns merely to brag about their work. This is a highly contemptuous, amoral view. It also dismisses any number of people who developed a product only to lament how it was later put to widespread destructive use, even if perhaps they should have foreseen such catastrophic outcomes.

Andreessen’s cynicism aptly reflects the very reason why so many people are expressing caution in the development and implementation of this technology. Clearly, people of his mindset and moral turpitude cannot be trusted to proceed cautiously or with the greater good in mind. It also provides a stronger impetus perhaps than any other to relegate Andreessen’s entire piece to the dustbin for the self-serving, arrogant nonsense that it is, bolstered in no small part by his own role in an industry that has continuously, and purposely, eschewed lawful or moral approaches as its core business model.

Andreessen’s second point about the “goings on” is that there is a whole group of professions focused on safety, research, and ethics. These professionals, he insists, get paid to “be doomers” and thus should be ignored. I wonder if he readily dismisses the same kinds of professionals who make sure his medications or foods don’t poison him, or that his car doesn’t suddenly burst into flames while he is driving it. Again, the hypocrisy cannot be overstated here.

Finally, and most pernicious of all, is that Andreessen deems anyone expressing these concerns as part of a “cult.” To describe this so-called cult, he uses the word extreme FOUR times in a single sentence. One has to wonder who is employing the extreme tactics here.

Critique 2: Will AI Ruin Our Society?

Next, Andreessen discusses the harmful outputs of AI and their effect on society. In line with his increasingly intellectually vacuous arguing, he summarizes arguments based on this issue as: “If the murder robots don’t get us, the hate speech and misinformation will.”

In short, he views hate speech (and what he calls its mathematical counterpart, algorithmic bias) and misinformation as imaginary issues. This despite unassailable proof of their existence. (Read my AI article linked here or at the end for just a tiny sampling of the available evidence).

Andreessen seriously tries to claim that banning “child pornography and incitements to real world violence” puts us on an inevitable slippery slope toward what he deems more nefarious and less logical censorship. To illustrate his point, he states that “hate speech, a specific hurtful word, [] misinformation, [or] obviously false claims” will drive censorship by “activist pressure groups” and others to suppress whatever speech they view as a threat to themselves or society. He does not bother to mention the demonstrable harm of exactly this kind of speech, or the (very long) history of restricting it to prevent or mitigate such harm.

Andreessen believes AI cannot be regulated partly because he cannot comprehend whose values such regulation should align with. He cites a paper titled, “A Multi-Level Framework for the AI Alignment,” as if it makes his point, but in fact it proposes quite the opposite even if it acknowledges the various difficulties in real-world application.

The real problem for Andreessen is that the four tiers of alignment proposed in that paper would all relegate billionaires’ interests to the very bottom and, if followed, would have a negative impact on their current luxurious lifestyles. Put another way, the paper’s authors note that society needs to make decisions about the values it wishes to emphasize if it is to adequately regulate globally invasive new technologies.

If most people chose to emphasize values such as improving environmental stability; maintaining the dignity of people regardless of race, ethnicity, or sexuality; and providing fair opportunities for all people, while deemphasizing value-deficient traits like excessive greed, abuse of workers, or arrogance- and resource-driven lawlessness, billionaires would move from the top echelon of society to its very bottom. Obviously, someone like Andreessen opposes any hint of the idea.

Critique 3: Will AI Take All Our Jobs?

Andreessen begins this section by correctly noting that many jobs have historically been lost to advances in technology. Few, if any, horse-drawn carriage operators exist in the world anymore for day-to-day transportation, for example. Most technological advancements affected narrow categories of jobs, allowing the resultant unemployment and subsequent shift to new positions to occur slowly, and not especially catastrophically.

In addition, many fields that lost jobs saw equal growth in new jobs that did not require a substantive change in or demand for previously unknown skillsets. Farm workers, for instance, saw a slow creep of job losses as new machines made attaining the same crop yields possible with far fewer personnel. Around the same time, assembly line jobs grew, providing an alternative to newly unemployed farmers.

Indeed, assembly lines themselves have slowly reduced manpower and replaced it with automated, robotic systems, but that shift has created many new jobs in system development, installation, monitoring, and others. Even in cases where technology required new training, the logistics of training employees in entirely new skillsets necessarily slowed the transition from one job type to another.

AI, in Andreessen’s view, will “cause the most dramatic and sustained economic boom of all time, with correspondingly record job and wage growth.” He determines this will happen first by explaining that the job-killing notions about AI, derived from the “Lump of Labor Fallacy,” incorrectly assume the premise that “there is a fixed amount of labor to be done in the economy at any given time, and either machines do it or people do it – and if machines do it, there will be no work for people to do.”

This idea is wrong, he intones, because technology applied to production equals productivity growth, which presumably requires or creates more jobs. The result, he continues, is lower prices for goods and services. Available evidence does not support any of these contentions (though he is right about the “Lump of Labor” principle).

From about 2005 to the present, overall productivity growth in the USA and other advanced economies has been down, despite a boom in technological innovation over the same period. Furthermore, much evidence suggests that technological advances play a relatively minor role in production when labor markets are restricted. And some evidence even indicates that technology reduces productivity among adjacent human laborers, themselves still necessary in the work force.

Wage growth over the last decade indicates advancing technologies play a partial role in improving only some workers’ income standards. It has much to do with the type of work at issue. While more highly educated workers see wage growth more in line with productivity, for the rest wage growth happens extremely slowly. With the advent of entirely new technologies, the corresponding growth of wages tends to occur decades after initial implementation for any group.

How AI ultimately affects job availability and growth in the long term remains difficult to assess. One thing is certain: Corporations will have no reservations about eliminating any job that can be replaced with AI to save money. This has already been happening even with AI in its currently flawed state of development. In those instances, there has not been a commensurate addition of new jobs.

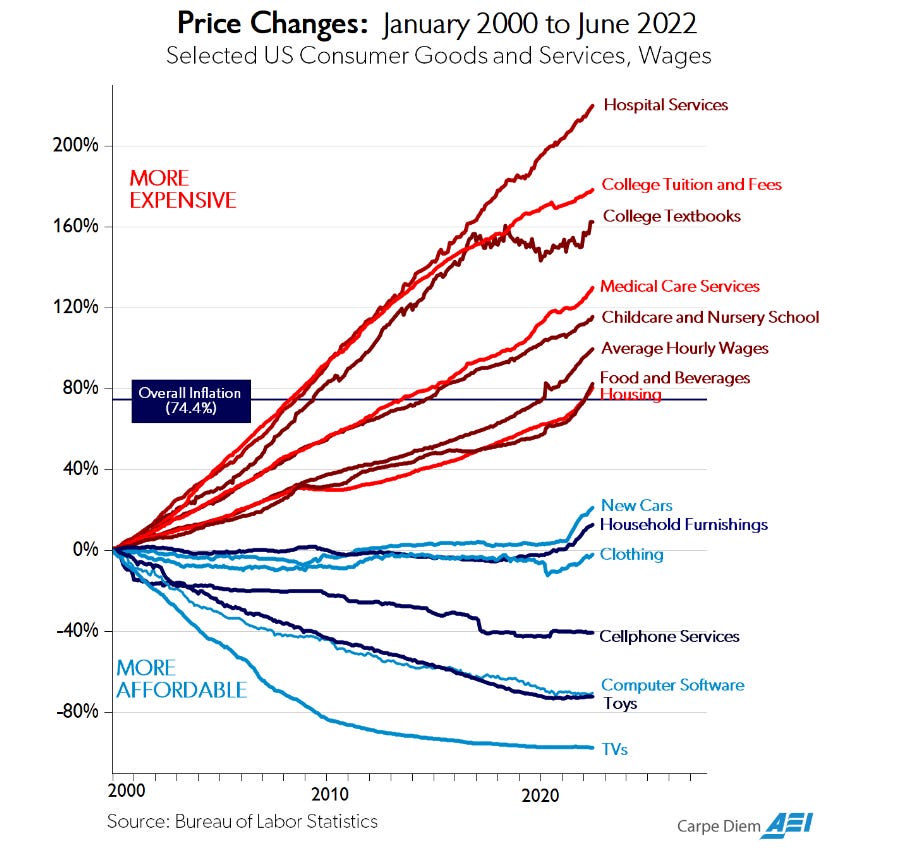

The cost of goods and services also offers little help toward Andreessen’s argument. The prices of many consumer goods have indeed dropped, such as TVs or home appliances. But the prices of critical services have gone up at a far greater rate, such as costs for education or medical services. Most of these services are already incorporating AI at some level into their infrastructure.

Other critical sector prices have gone up substantially as well, including food, housing costs, and childcare. The savings wrought by new technological efficiencies are not going to be some panacea for working class people. People like Andreessen, the billionaires, stand to gain the most from any of the supposed benefits he extols. Which segues to his next point:

Critique 4: Will AI Lead To Crippling Inequality?

This is the wrong question. The right question is whether it will lead to increasingly crippling inequality. Here’s how Andreessen begins:

[The] central claim of Marxism [is] that the owners of the means of production – the bourgeoisie – would inevitably steal all societal wealth from the people who do the actual work – the proletariat. This is another fallacy that simply will not die no matter how often it’s disproved by reality. But let’s drive a stake through its heart anyway.

What follows can only be viewed as a deflection. He says, “every new technology — even ones that start by selling to the rarefied air of high-paying big companies or wealthy consumers — rapidly proliferates until it’s in the hands of the largest possible mass market, ultimately everyone on the planet.”

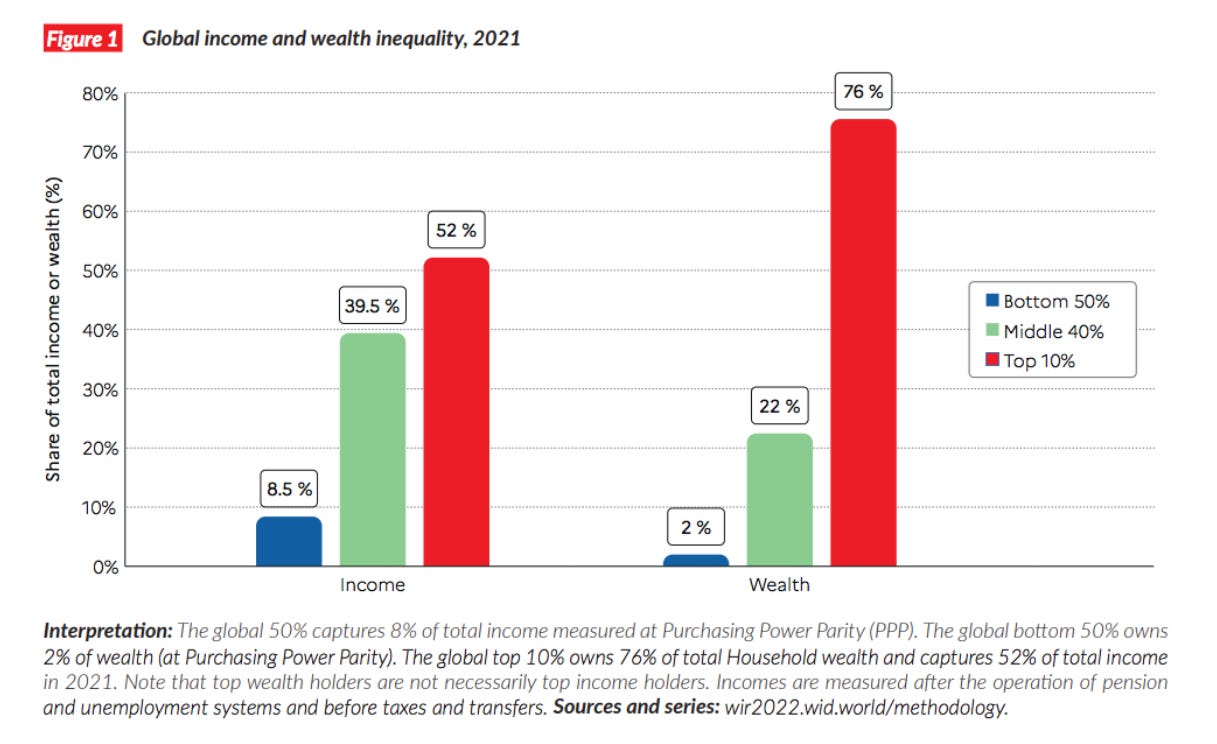

He is tacitly acknowledging that society (the workers, or the proletariat) is the source of wealth, but ignoring where that wealth goes. Globally, income inequality has risen markedly over the last 50 years. According to the World Economic Forum, the richest 10% possess 52% of the world’s income, and the bottom 50%—yes, half—of the global population earns just 8%. The numbers are worse when calculating wealth.

In different countries, the effect varies to an extent. But in nearly every country, the gap has widened; the US has seen the gap expand more than just about any other country. This is in spite of increased production of goods and services or overall GDP.

For billionaires, the last half-century has been a windfall. Since the pandemic alone, billionaire wealth has increased by 50%, enhanced partly by the epidemic of profiteering during those two or so years, but also following a decades-long trend. Billionaire wealth growth has been further enabled by the fact that most of them pay virtually no income tax (in the USA, anyway, where the largest number of them are located). And Big Tech often adopts methods to prevent small companies and startups from engaging in the innovative process, hoarding all the wealth to themselves.

Andreessen invokes Marxism to distract from the reality that he and other billionaires handsomely profit from a deeply unfair system. Marxist rhetoric is a tired ploy by politicians, the super-rich, and others who routinely support policies that maintain the wealthiest people’s hold on a verifiably rigged game. No matter how much Andreessen decries as a fallacy billionaires’ “steal[ing] all societal wealth,” it is unequivocally true that they are trying their hardest to do so, and succeeding.

He also pretends that inequality is primarily caused by economic sectors resistant to technology, and not by the various methods big business and billionaires use to isolate the markets in their favor or avoid paying taxes. Specifically, he claims that the housing, education, and health care sectors form the basis for inequality.

By resistance to technology, he means regulation that slows or restricts the adoption of new technologies in these fields. In support of his statement (it isn’t really an argument here, since that’s all he says), he cites Mark J. Perry, an economist who makes or bolsters repeatedly debunked claims about climate change to further his pro-wealthy viewpoints.

Perry’s partiality toward applying bogus claims to support his deceptive positions on climate change reflects equally in his nonsensical article that Andreessen cites on inequality. As an example, Perry argues that higher prices in certain sectors correlate directly to greater levels of government involvement (i.e., regulation). For this overly generalized argument, he provides two sources. One is a chart compiled from data by the Bureau of Labor Statistics. The chart—shown below—does not provide any data regarding government “involvement.” Perry’s second source is a commenter on his Twitter status. To be clear, this guy’s comment:

This is the core of Andreessen’s evidentiary support for his position on inequality.

Critique 5: Will AI Lead To Bad People Doing Bad Things?

Here, I am forced to actually agree with Andreessen’s central premise: “AI will make it easier for criminals, terrorists, and hostile governments to do bad things, no question.” He goes on to say that outright banning AI would not help as AI is “the easiest material in the world to come by – math and code.” To some degree this is true, though the biggest AI companies have gone to great lengths to hide their code, primarily in an effort to prevent researchers from identifying its seemingly innumerable flaws and biases. They do so even though at least one Big Tech company’s own staff admit in an internal memo that open-source code is better.

With the ubiquity of AI in the public sphere, I agree with Andreessen that there is no turning back—that is, outright bans are simply not going to work. The lack of foresight or action of regulators has opened that Pandora’s Box. I also agree with his next paragraph in which he explains that the USA (and others) already has laws to prevent various bad acts, such as hacking (computer trespass). His conclusion to that paragraph, however, is entirely dishonest: “I’m not aware of a single actual bad use for AI that’s been proposed that’s not already illegal.”

As I have discussed ad nauseam, AI is employed in many bad ways, including promoting hate speech and misinformation, stealing copyrighted works and committing fraud, or inciting violence. Amusingly, Andreessen offers his own solution to these misdeeds: “we should focus first on preventing AI-assisted crimes before they happen.” That’s 100% correct. And the logical extrapolation of that argument is to regulate AI. Simple. Yet, he twists even this around by employing yet another tactic:

For example, if you are worried about AI generating fake people and fake videos, the answer is to build new systems where people can verify themselves and real content via cryptographic signatures. Digital creation and alteration of both real and fake content was already here before AI; the answer is not to ban word processors and Photoshop – or AI – but to use technology to build a system that actually solves the problem.

Andreessen essentially proposes here that the burden should fall on everyday users to adopt the necessary technologies to combat fraud, rather than implement some regulations or prohibitions on fraudulent uses. This is problematic in the first instance because it imposes an unreasonable burden on regular people. But it also suffers from the fact that technologies to detect such fraudulent creations remain largely unreliable.

Even when they inevitably improve, so too will the quality of fraudulent productions, thereby forcing everyday people to strive to keep ahead in this mini-arms race. It is not a tenable solution. Crafting regulations regarding AI-generated images, videos, and other content is.

Critique 6: The Actual Risk Of Not Pursuing AI With Maximum Force And Speed

Andreessen is also correct in stating that multiple entities are competing to produce the most advanced AIs, with many backed by the enormous resources of governments. I agree that research and development absolutely must continue at a fevered pace. I vehemently disagree with him on the notion that this is a “We (USA) win, they (China) lose” scenario.

It is remarkable to me that, after nearly a half-century of destruction left in the wake of Cold War thinking, there is a new movement of people promoting the same notions. In the current global environment, there is no “we” that wins or loses. Rapid deployment of anything harmful means everyone loses. In its current state, there is no question this applies to AI.

No matter how vociferously investors and venture capitalists whine about regulation as an imposition on their bourgeois lifestyles, governments must take action. The most immediate, obvious solution is to require AI source code to be open source, followed by requiring AI outputs to divulge their source material and whether an output is actually AI-generated at all. Those simple regulations would resolve an abundance of AI’s current problems, and would certainly speed the process toward realizing some of Andreessen’s loftier goals.

So, was Andreessen right about anything?

The short answer: yes. AI has extraordinary potential to speed up and expand scientific achievement. It allows scientists to consume and comprehend datasets never before imaginable. AI finds patterns at speeds a human might spend decades seeking. If applied properly, AI will almost certainly increase production, and possibly productivity. There is no question these issues should be explored, researched, and developed.

Governments should adequately fund these endeavors and remove the corporatist hoarding that routinely prevents, or at least inhibits, meaningful advances in science and technology. But the rollout should absolutely be controlled. It cannot be society’s problem alone to figure out what to do with harmful AI or to determine which outputs are nonsense or frauds. People struggle with distinguishing between real information and misinformation now. To expect them to magically identify AI-generated nonsense is utterly preposterous.

If anything, what Andreessen has accidentally accomplished in his article is to weave together a rather strong series of arguments for why AI should be regulated. Well done.

* Articles post on Wednesdays and Saturdays *

I am the executive director of the EALS Global Foundation. You can check out the Evidence Files Medium page for additional essays on law, politics, and history; follow the Evidence Files Facebook for regular updates, or Buy me A Coffee if you wish to support my work.

Artificial Intelligence

It is hard to know what people mean by the term AI because the tendency is to characterize what it will do rather than what it is. Media take advantage of the uncertainty over the technology to bait clicks using vivid headlines like “Potential Google killer could change US workforce as we know it