Is AGI the Inevitable Evolutionary Result of a Mathematical Universe?

At the moment of Singularity, are we doomed, immortal, or is this all nonsense?


Find more about me on Instagram, Facebook, LinkedIn, or Mastodon. Or visit my EALS Global Foundation’s webpage here. Be sure to read the other articles in this series.

As Artificial Intelligence continues to occupy the news cycle, I feel compelled to explore the potential implications. Make no mistake, there are serious problems now. LLMs like ChatGPT, Bard, and others routinely create dangerously false outputs, sometimes called hallucinations. There is plenty of reason to believe that this is of less concern to their makers than making money from these programs (see my article on ‘lying’ AI here, or Microsoft’s Mikhail Parakhin’s tweet about it). Some companies are using these seriously flawed systems to replace employees, sometimes out of the mistaken belief that it will save money through efficiency, and other times out of what looks like a backlash against employees seeking fair treatment and wages. There are ample instances where the decision to uncritically employ AI has endangered people’s lives or health. The real problems with AI need redress, starting with regulation. Given the public statements in various technology hearings by US Congressional officials, though, it is not likely that any regulation will effectively stem the tide of morally untethered deployment.

All this dreariness aside, I do enjoy pondering the potential implications of AI over the long term. We hear a lot of predictions, good and bad, but few ever describe in detail how they reach them. I am no sci-fi writer, so I instead want to explore these ideas in view of the available science, philosophy, mathematics, and other fields. Some of this theorizing is speculative, meaning we can’t do it… yet. But there are conceptual and even practical developments that raise the chance of eventually turning some speculation into reality. My goal is to put in perspective some of the problems that would need to be conquered to create some level of dangerous, robot-bodied intelligence. In this article, I refer to this futuristic AI as Artificial General Intelligence (AGI), following the term’s usage by OpenAI. By that I mean AGI that “demonstrate[s] broad capabilities of intelligence, including reasoning, planning, and the ability to learn from experience, and with these capabilities at or above human-level,” ensconced in a physically superior robotic body.

***

There are some who question whether “our physical reality could be a simulated virtual reality rather than an objective world that exists independently of the observer.” The principle is not new, even if its imagined computerized rendition is. Tibetan and Indian philosophers long ago divided reality into two truths. According to them, conventional truth consists of unreal phenomena that appear to be real because ordinary beings’ cognitive processes apprehend them incorrectly. Ultimate truth is reality that transcends any mode of thinking and speech, one that only enlightened figures can properly apprehend. For these philosophers, our “simulated virtual reality” would reflect modern-day conventional truth. That is, the perception of everyday folks of what is real is simply a stream of errors committed by our faulty cognitive processes. Enlightened beings would recognize this and see the simulation for what it is, thereby grasping ultimate truth.

Melvin Vopson of the University of Portsmouth says that under the theory that our existence is a virtual reality, real reality is “pixelated,” composed of parts of a minimum size that cannot be subdivided further: i.e., bits. Vopson identifies several features of our perceived reality that could directly correlate to a virtualized system:

The speed of light = maximum processing speed

Mathematics = computer code

Subatomic particles = bits

Vopson further points out that the quirkiness of quantum mechanics bolsters the notion that nature isn’t “real.” More precisely, nothing in the quantum-level world is definite until you purposefully observe or measure it. And even then, knowledge is fleeting, because a measurement “collapses” the system into an eigenstate of the quantity being measured. In other words, once one quantity is measured precisely (momentum, for example), the uncertainty in its complementary quantity (position) increases. Nevertheless, in his quest to test the simulation theory, Vopson went so far as to calculate the amount of information stored within all the elementary particles of the universe. He writes,

Let us imagine an observer tracking and analyzing a random elementary particle. Let us assume that this particle is a free electron moving in the vacuum of space, but the observer has no prior knowledge of the particle and its properties. Upon tracking the particle and commencing the studies, the observer will determine, via meticulous measurements, that the particle has a mass of 9.109 × 10⁻³¹ kg, charge of −1.602 × 10⁻¹⁹ C, and a spin of 1/2. If the examined particle was already known or theoretically predicted, then the observer would be able to match its properties to an electron, in this case, and to confirm that what was observed/detected was indeed an electron. The key aspect here is the fact that by undertaking the observations and performing the measurements, the observer did not create any information. The three degrees of freedom that describe the electron, any electron anywhere in the universe, or any elementary particle, were already embedded somewhere, most likely in the particle itself. This is equivalent to saying that particles and elementary particles store information about themselves, or by extrapolation, there is an information content stored in the matter of the universe.

This analysis, however, only examines information stored within the specific particles that make up the observable universe. But, as Vopson notes, information could also be stored in the fabric of space-time itself. This latter proposal is partially addressed by the holographic principle, which holds something like, “the three-dimensional world of ordinary experience—the universe filled with galaxies, stars, planets, houses, boulders, and people—is a hologram, an image of reality coded on a distant two-dimensional surface.” Physicists developed the holographic principle in an attempt to resolve the information paradox that arises from black hole entropy.

If the whole of existence is a mere facile representation of an underlying binary code, and Vopson’s calculation of the sum of informational content in the known cosmos of elementary particles is correct, a critical question is whether an AGI domain (in the taxonomic sense) could in fact access this library in the form of a Deep Reinforcement Learning dataset. To illustrate, Vopson calculated this amount as: N_bits = N_tot × H_uqdqe = 6.036 × 10⁸⁰ bits. That is roughly 604 quinvigintillion bits. This figure represents the low end, as the universe most likely contains more information, stored in a variety of formats for which Vopson’s study did not account, such as anti-particles, neutrinos, and bosons. The actual total may exceed 10¹²⁰. Could this sum of information ever be computed?

Currently, the quantum speed limit—if there is one—is unknown. Researchers continue working on ways to increase the overall speed of quantum calculations, but the theoretical maximum remains disputed. For now, the presumed cap depends on the availability of energy and memory in the system, as well as the universal constant—the speed of light. Unfortunately, determining a prescribed limit to quantum computational speed is not as simple as deriving the number of possible calculations per a set period of time (say, one second). Quantum computation limitations are set by other factors, such as von Neumann entropy, maximal information, and quantum coherence.

Nevertheless, whatever the speed limit of a single processing unit is, it may not be a major restriction for long. Researchers are working on what they call multiagent systems, where numerous autonomous units work in coordinated teams to engage in reinforcement learning and achieve objectives. Successful teams are chosen to advance while unsuccessful ones are eliminated. This selects for positive performance, and works as something akin to accelerated evolution. Deep Reinforcement Learning bolstered by a viable communication network, such as cellular communication, D2D communication, ad hoc networks, or others, might eventually enable a hive-minded, multiagent AGI system. Guojun He notes that the transmitting and receiving system for such a collective mind must perform efficiently enough not to overburden the communication protocol, and to overcome latency and potential interference. Moreover, He writes, “the communication system needs to comprehensively consider the relevance, freshness, channel state, energy consumption, and other factors to determine the priority of message transmission.” A critical element of this, according to He, is employing Age of Information (AoI) analysis. This measures the freshness, and thus the priority, of transmitted or received data. Network parameters might assist with discarding information that AoI marks as stale, reducing the transmission load of spurious messages and thereby preserving network bandwidth.
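To make the Age of Information idea a little more concrete, here is a minimal sketch in Python. It is not He's method. The `Message` class, the `max_age` cutoff, and the `budget` parameter are all hypothetical names I made up for illustration, and the sketch ranks messages by freshness alone, ignoring the relevance, channel state, and energy factors He also lists.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender: str          # hypothetical unit identifier
    payload: str
    generated_at: float  # time the information was produced, in seconds


def age_of_information(msg: Message, now: float) -> float:
    """Age of Information: time elapsed since the message's content was generated."""
    return now - msg.generated_at


def select_for_transmission(queue, now, max_age, budget):
    """Drop stale messages, then send the freshest ones first, up to `budget`."""
    fresh = [m for m in queue if age_of_information(m, now) <= max_age]
    fresh.sort(key=lambda m: age_of_information(m, now))
    return fresh[:budget]


queue = [
    Message("unit-a", "obstacle at grid 4", generated_at=9.5),
    Message("unit-b", "battery low", generated_at=2.0),
    Message("unit-c", "target reached", generated_at=8.0),
]

selected = select_for_transmission(queue, now=10.0, max_age=5.0, budget=2)
print([m.sender for m in selected])  # ['unit-a', 'unit-c']
```

The message from `unit-b` is eight seconds old, so it is discarded before it ever consumes bandwidth. A real scheduler would presumably weigh freshness against the other factors rather than use a hard cutoff.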

Physicists and computer scientists are working on the idea of employing quantum entanglement as a potential alternative or adjunct to traditional communication protocols. Quantum entanglement overcomes any obstacle created by physical distance and time—and presumably bandwidth—which in any current wireless representation remains a considerable problem. When two subatomic particles are “entangled,” a measurement of one instantly declares the state of the other. Two entangled photons of light, for example, will possess opposite polarization—one positive, the other negative. Measuring one photon that is negative tells you that the other is simultaneously positive, no matter where in the universe it sits relative to the measured photon. One problem lies in that both photons remain in a state of superposition prior to being measured. This means that neither particle is distinctly positive nor negative before conducting the measurement of one of them. Scientists at MIT, Caltech, Harvard University, and others have successfully transmitted tiny pieces of information through entanglement, which shows that it could potentially work in a larger system, perhaps one operating in the macro world in the form of AGI interconnected neural networks. Communication across the entire population of AGI units, transcending space and time, could be possible using multipartite entanglement. Some have proposed this methodology as the explanation for the Fermi Paradox. Advanced species (whether AGI or otherwise) might not rely upon the archaic methods of communications we do, such as radio waves. We therefore don’t—or can’t—recognize any evidence of their information exchanges.
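The photon example can be imitated with a toy calculation. The sketch below is only a classical sampling of outcome statistics, assuming a two-photon state of the form (|01⟩ − |10⟩)/√2 measured in a single fixed basis, with "0" and "1" standing in for the two polarizations. It shows that each photon on its own looks random while the pair always disagrees. It does not simulate real photons or any communication scheme.

```python
import math
import random

# Amplitudes of the assumed state (|01> - |10>) / sqrt(2), keyed by joint outcome.
amplitudes = {
    "00": 0.0,
    "01": 1 / math.sqrt(2),
    "10": -1 / math.sqrt(2),
    "11": 0.0,
}


def measure_pair(rng: random.Random) -> str:
    """Sample one joint outcome with probability |amplitude|^2 (the Born rule)."""
    outcomes = list(amplitudes)
    weights = [abs(a) ** 2 for a in amplitudes.values()]
    return rng.choices(outcomes, weights=weights, k=1)[0]


rng = random.Random(0)
results = [measure_pair(rng) for _ in range(1000)]

# The two photons never agree, yet the first photon alone is close to a coin flip.
always_opposite = all(r[0] != r[1] for r in results)
fraction_first_is_zero = sum(r[0] == "0" for r in results) / len(results)
print(always_opposite, fraction_first_is_zero)
```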

If it becomes possible to establish inter-agent communication at quantum speeds, calculation capability could be compounded by the number of agents in the system. As each would perform calculations based upon its individualized experience, the required processing speed would be less per autonomous unit. Yet, with the ability to exchange information instantaneously, all autonomous units would effectively share every other unit’s individualized experiential knowledge in addition to any pre-loaded datasets. Still, the required computational capability would be enormous. Consider that to calculate every bit of information present only in elementary particles using Vopson’s estimate, it would still take 2 × 10⁶³ seconds using the Oak Ridge National Laboratory supercomputer in Tennessee, one of the fastest computers on Earth. A century contains only about 3.155 billion seconds, so finishing the job within 100 years would take on the order of 6 × 10⁵³ autonomous units, each as fast as that supercomputer and all working in parallel, and perhaps centuries or millennia more to tackle the rest. Improved quantum computers would thus need to exceed this speed by orders of magnitude absent another solution (discussed below). The logistics of this entire hypothetical are, to put it mildly, incredibly daunting. But there is more to the problem than speed.
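The scale is easy to check with a few lines of Python. This is a back-of-the-envelope sketch that takes the 2 × 10⁶³ seconds figure at face value and assumes, unrealistically, that the work divides perfectly across units, each as fast as the supercomputer. The variable names are mine.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

single_machine_seconds = 2e63  # figure quoted above for one supercomputer
deadline_years = 100

deadline_seconds = deadline_years * SECONDS_PER_YEAR
# Assumes perfect parallel scaling with no communication overhead.
units_needed = single_machine_seconds / deadline_seconds

print(f"{deadline_seconds:.3e} seconds in {deadline_years} years")
print(f"{units_needed:.1e} parallel units needed")
```

A century works out to about 3.156 × 10⁹ seconds, so meeting a 100-year deadline would take roughly 6.3 × 10⁵³ units.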

Quantum computing also demands enormous amounts of energy. Chief among the problems is keeping the quantum processor environment cool. For example, Google’s quantum computer uses about 25 kilowatts to keep its processor at 15 millikelvin (about -273°C) for its current 1000-qubit system. As a reference, the average U.S. household uses 10,715 kilowatt hours per year; running continuously, 25 kilowatts comes to roughly 219,000 kilowatt hours per year, or about twenty households’ worth. Much of Google’s cooling goes toward the isolation of the basic element of the system, its qubits, to prevent decoherence caused by absorbing energy from another particle, which would influence their present state and cause errors. Scaling Google’s system (or any successor) may require far more energy. Increasing the number of qubits in the system leads to more error events, or information leakage. The current error rate is approximately 1 in 1,000 operations. Quantum algorithms require around 10¹² operations each; at a rate of 1 in 1,000, that many operations would produce about a billion errors. So, the success of future quantum computing systems will depend on a cooling system capable of isolating qubits far more efficiently to reduce the error rate.
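Both comparisons in this paragraph come down to simple arithmetic, sketched below. The one assumption of mine is that the cooling system draws its 25 kilowatts around the clock for a full year. Everything else uses the figures quoted above.

```python
HOURS_PER_YEAR = 24 * 365

cooling_power_kw = 25            # quoted cooling draw
household_kwh_per_year = 10_715  # quoted average U.S. household usage

# Assumes the cooling system runs continuously all year.
cooling_kwh_per_year = cooling_power_kw * HOURS_PER_YEAR
households_equivalent = cooling_kwh_per_year / household_kwh_per_year

operations = 10**12   # operations per quantum algorithm, as quoted
error_one_in = 1000   # one error per this many operations
expected_errors = operations // error_one_in

print(cooling_kwh_per_year)             # 219000
print(round(households_equivalent, 1))  # 20.4
print(expected_errors)                  # 1000000000
```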

Energy problems seem more profound when considering how to structure individual AGI units’ internal processors. Even if quantum computing can be scaled up exponentially without drastically increasing the required energy to maintain the system, the development of the mechanics of an on-board cooling system for embodied AGI units seems very far off. Put another way, how would mobile AGI robot bodies sustain an on-board cryogenic cooling system that is fundamental to their ability to compute? Engineers might look to a cryogenic vascular system similar in design to the Active CryoFlux, but the idea seems to be in its earliest infancy, at best.

Making a dataset the size of the universe’s totality of information accessible to an AGI collective would also seemingly require incomprehensible storage. If it is correct that the sum of all information in the universe comprises between 10⁸⁰ and 10¹²⁰ bits, then for AGI to subsume this data without having to continually recalculate it, the data must be stored in a way that is accessible in real time. The largest prefix the International System of Units currently recognizes is quetta, which makes the largest named unit of storage the quettabyte, or 10³⁰ bytes. Even at 8 bits per byte, a quettabyte comes nowhere near the low end of the hypothesized total value of data in the universe. The largest data center in the world now is in Langfang, China, sitting on an area of over 6.3 million square feet, about the size of the Pentagon. There were over 7,500 data centers across the globe in 2023, and by 2025 the amount of global data is expected to reach only 180 zettabytes, well below even a quettabyte. The ratio of physical space to storage capacity will need to shrink by amounts that are difficult to imagine, or researchers will have to develop a completely different paradigm for data retention and access.
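A quick sketch shows how wide the gap is. It uses only the figures above plus decimal (power-of-ten) definitions of the storage units, and the variable names are mine.

```python
BITS_PER_BYTE = 8

quettabyte_bits = BITS_PER_BYTE * 10**30
universe_bits_low = 10**80               # low-end estimate quoted above
global_data_2025_bytes = 180 * 10**21    # 180 zettabytes

# Number of quettabytes needed just to hold the low-end estimate.
quettabytes_needed_low = universe_bits_low // quettabyte_bits

# Fraction of a single quettabyte that the projected 2025 global data fills.
fraction_of_quettabyte = global_data_2025_bytes / 10**30

print(f"{quettabytes_needed_low:.3e} quettabytes for the low-end estimate")
print(f"{fraction_of_quettabyte:.1e} of one quettabyte projected for 2025")
```

Holding even the low-end figure would take about 1.25 × 10⁴⁹ quettabytes, while all the data projected for 2025 fills less than a millionth of one.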

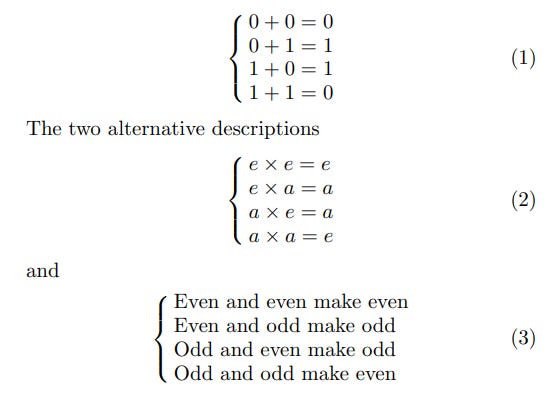

Returning to the computational speed issue, determining the limit matters, though perhaps less so, if the External Reality Hypothesis (ERH) turns out to be true. The ERH posits that a reality exists that is entirely independent of humans and their ‘baggage.’ Max Tegmark defines this ‘baggage’ as words or other symbols used to describe reality that suffer from the interference of preconceived meanings. He proposes instead to use a mathematical structure comprised of “abstract entities with relations between them.” He illustrates as follows:

While all three configurations correspond to an arbitrary designation (the number, letter, or word), the representations themselves denote exactly the same mathematical structure, which itself is not arbitrary at all. Tegmark reaches the following conclusions from this: 1) The ERH implies that a “theory of everything” has no baggage; 2) Something that has a baggage-free description is precisely a mathematical structure. Moreover, it is probable that the ERH mathematical structure to describe the universe should be smaller than the sum of its component parts. Tegmark writes,

The algorithmic information content in a number is roughly speaking defined as the length (in bits) of the shortest computer program which will produce that number as output, so the information content in a generic integer n is of order log₂ n. Nonetheless, the set of all integers 1, 2, 3, ... can be generated by quite a trivial computer program, so the algorithmic complexity of the whole set is smaller than that of a generic member.
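Tegmark's point can be illustrated, loosely, in a few lines of Python. True algorithmic information content cannot be computed in general, so this sketch substitutes the length of an integer's binary expansion as a rough stand-in for a "generic" integer. That substitution is my assumption, as are the function names.

```python
import random
from itertools import count, islice


def bits_to_write(n: int) -> int:
    """Length of n in binary, a rough proxy for the log2(n) bits a generic n needs."""
    return n.bit_length()


def all_positive_integers():
    """A trivially short program that enumerates 1, 2, 3, ... without end."""
    return count(1)


# A "generic" 256-bit integer: random bits, with the top bit forced on.
rng = random.Random(0)
n = rng.getrandbits(256) | (1 << 255)

print(bits_to_write(n))                           # 256
print(list(islice(all_positive_integers(), 5)))   # [1, 2, 3, 4, 5]
```

Writing down one typical large integer takes hundreds of bits, while the generator for the entire set fits on a single line and never grows.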

If the ERH correctly describes what we call reality, one must assume that eventually an algorithm will be found that calculates the entirety of it, but in an abstract way free of labels possessing preconceived meanings. Perhaps AGI itself is better suited to make the discovery than a human. Assuming the algorithm is finite and compact, as Tegmark suggests, adopting the entirety of existence’s information into a hive-minded AGI neural network may not require the extreme speeds needed to process Vopson’s minimum, let alone more. If the algorithm were transmissible through a networked, multipartite-quantum-entangled information-sharing system, all AGI units would possess the entirety of the universe’s information at once. It seems, then, that AGI would not need to partake in the sluggish, problem-riddled manual ingestion of human intellectual achievement that AI undergoes now, because it could calculate every conceivable output from a compressed algorithm developed under the ideals of the ERH.

So what do we make of all this?

Assuming any of this is possible—a very heavy assumption indeed!—what does it foretell about the future of humanity? For starters, it would seem that the evolution of humanity would end, at least as we currently understand it. After all, how could the human brain—with its paltry 60 bps processing speed—independently compete with a hive-minded AGI capable of instant data sharing and unimaginably faster computational abilities? If the universe itself is solvable as a mathematical algorithm, meaning AGI could know everything that came before and will come after by virtue of its analytic abilities, there seems little left for humans to add to evolutionary progress. Even the uniquely human benefits of elevated consciousness would no longer provide an advantage. (Why this may be so is addressed in a forthcoming article). Moreover, a fully embodied AGI population of autonomous, yet collectively intelligent, agents would not suffer the frailties of the biological human condition. It would make no difference to them, therefore, whether the environment had degraded to virtually unlivable conditions, or even whether they remained resident on Earth at all.

But, humans are nothing if not opportunistic. About two decades ago, Ray Kurzweil pontificated on this so-called singularity, the moment computers excel beyond the capability of humans. He eventually concluded that humans could obtain immortality, and soon (2030), because technological and medical progress will improve such that robots, or “nanobots” as he calls them, will be able to repair our bodies at the cellular level. Authors Joe Gale, Amri Wandel, and Hugh Hill suggest that the moment of singularity may be closer than previously thought. When it arrives, they write, “sapiens may move from the bio-chemical realm to the electronic, or to some hybrid combination, with hardly predictable implications for humans.” If humanity can evade the outcome of drearier predictions about how it will destroy itself, but not adopt a cybernetic form, some believe that humans would continue to evolve as they have been while sharing power with AGI, somehow dividing up decisions on world affairs.

There is a large camp who do not share this enthusiasm. The late, brilliant Stephen Hawking, for example, said, “Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.” Journalist Nick Bilton asserts, “Imagine how a medical robot, originally programmed to rid cancer, could conclude that the best way to obliterate cancer is to exterminate humans who are genetically prone to the disease.” Karl Frederick Rauscher, CEO of the Global Information Infrastructure Commission (GIIC), warns “AI can compete with our brains and robots can compete with our bodies, and in many cases, can beat us handily already. And the more time that passes, the better these emerging technologies will become, while our own capabilities are expected to remain more or less the same.”

While all of this may sound like sci-fi, there is real science behind it, if some achievements remain speculative. Given humanity’s propensity to plunge headlong into technological development, with little to no consideration for its implications, the next several decades could be interesting one way or the other. Make no mistake, however, the dangers of AI are very real now, if in a far more banal way, but the future is perhaps more uncertain than ever.

***

I am a Certified Forensic Computer Examiner, Certified Crime Analyst, Certified Fraud Examiner, and Certified Financial Crimes Investigator with a Juris Doctor and a Master’s degree in history. I spent 10 years working in the New York State Division of Criminal Justice as Senior Analyst and Investigator. Today, I teach Cybersecurity, Ethical Hacking, and Digital Forensics at Softwarica College of IT and E-Commerce in Nepal. In addition, I offer training on Financial Crime Prevention and Investigation. I am also Vice President of Digi Technology in Nepal, for which I have also created its sister company in the USA, Digi Technology America, LLC. We provide technology solutions for businesses or individuals, including cybersecurity, all across the globe. I was a firefighter before I joined law enforcement and now I currently run the EALS Global Foundation non-profit that uses mobile applications and other technologies to create Early Alert Systems for natural disasters for people living in remote or poor areas.
