Linguistic Entropy and the Voynich Manuscript

The Information-Theoretic Measure of Language

Visit the Evidence Files Facebook and Medium pages; Like, Follow, Subscribe or Share!

Typical users of language tend not to think much about the distribution of words or the quantified amount of information contained within a given block of words. In many cases, users spend little time dwelling on individual words at all, unless one emerges that stymies understanding. Put differently, we intrinsically know or comprehend what we are reading, writing, hearing, or saying without carefully analyzing any specific linguistic element.

People pay a bit more attention when encountering more complicated language, such as when reading an academic article, and perhaps even more still when trying to learn a new language. In those processes, however, learners routinely focus upon word-by-word meaning, and less often on the grammatical structure insofar as it affects the meaning.

Things get complicated quickly when presumed ‘text’ obstructs even the most basic comprehension. The Voynich Manuscript (VMS) provides perhaps the seminal example. While it contains what seems to be scribed text, no one knows for sure whether what appears on the page actually constitutes a language at all. Are the characters letters? Are the collections of characters (letters) words? How does one approach deciphering it without knowing whether the scribbles on the parchment are language at all? One method is through linguistic entropy analysis:

Language entropy estimates individual- and contextual-level differences in the extent to which multiple languages are engaged.

Languages contain words, meaning-units or grammatic signifiers, which appear with a level of measurable predictability and frequency of occurrence. One study broke down language into tokens and determined that 100,000 tokens provide a sufficient sample size to reliably estimate values. Tokens are defined as lexical categories, such as nouns, verbs, or adjectives.

Defined this way, tokens differ from byte pair encoding which tokenizes any aspect of language, such as single characters, punctuation marks, or even word spaces. Entropic studies, thus, can employ any tokenized encoding scheme based upon the information available. In the case of the VMS, the only definitive contextual information is the script itself. Therefore, entropic analysis of the VMS so far has relied upon “script compositionality, character size, and predictability.”

Luke Lindemann and Claire Brown conducted this kind of study on the VMS, titled “Character Entropy in Modern and Historical Texts: Comparison Metrics for an Undeciphered Manuscript.” Their study focused on a comparative analysis of the VMS text with works written in various known languages to ascertain the VMS’s viability as a natural language. They measured character placement and predictability to determine whether the VMS exhibits these characteristics in at least partial alignment with the patterns present within known languages and, if not, how this might inform future efforts at deciphering it. From here onward, their article will be referred to as “LLCB.” What follows analyzes various linguistic entropy studies of the VMS including, but not limited to, the LLCB.

Linguistic Entropy Analysis of the VMS

The LLCB opens by explaining that the VMS contains “38,000 words of text.” It is important to point out that this is an assumption, albeit a necessary one—after all, we don’t really know if each set of characters contained between spaces are in fact words. To date, no one has discovered another document written in the same script.

Most of the manuscript follows a line-by-line format, apparently written from left to right. Scholars base this belief on the consistent starting point of each line, with a tendency toward block formatting (ending in the same place for each line), with the final sentence of each paragraph typically ending short of the right margin. Like most languages, words are assumed by groupings of characters preceded and followed by a space. The text contains no obvious punctuation, though this is not unusual among many languages or in medieval works contemporaneous to the VMS.

Characters in various places seem to resemble characters of other languages. For example, those identified by appearance as 'a', 'c', 'i' (undotted), 'm' 'n' and 'o' all resemble Latin characters. Certain Voynich characters also closely resemble Latin abbreviations known at the time. The Cappelli dictionary of 1912 compiles nearly all of these known abbreviations.

Elsewhere, numerals appearing in the VMS look like early Arabic numbers in some places and modern Arabic numbers in others. Another peculiar set of characters have been named the “gallows” characters. These are written above the majority of other characters and their purpose remains unknown. From these observations, many scholars—though not all—have identified each individual character in the VMS as a letter or number, and from that they presume that clumps of letters separated by spaces indicates words.

Performing probabilistic studies on the VMS, such as that performed and reported in LLCB, requires adopting these assumptions to commence. Because of the dearth of information about the text, this is not an unwarranted step to take to proceed with analysis. Our research at Softwarica does not, however, make this presumption; rather, it seeks to create a statistical matrix of the similarity of characters among each other. This is to help enhance studies that rely upon the distribution and placement of specific characters themselves, rather than transliterated values assigned to them based upon human observation. In any event, until that comparison is completed, current scholarship remains driven by the various transliterated alphabets.

A brief note on transliteration

Transliteration simply means applying an English letter or Unicode value to each character of a non-Latin or non-alphabetic language. A relatively modern example is the pinyin system for transliterating Chinese (pinyin literally means ‘spelled sounds’). To illustrate, the word for the color red in Chinese (红) is transliterated as hóng with the accent representing a feature of its pronunciation. The word is pronounced with a long ‘o’ adding an upward tone, much like how English speakers raise the tone of the final word in a question. In the VMS, transliteration is conducted based on the appearance of each character since no one knows how they might sound. There are several versions of transliterated Voynichese, but to exhibit how the values are applied, here is a description of how one system works, called Frogguy:

Voynich letters which look like lowercase Roman letters are represented by those letters. Others, such as the "gallows" are broken up into constituent strokes, each stroke represented by a lowercase Roman letter, or a digit or a punctuation mark that looks as much as possible like it or its mirror-image.

To show that a letter connects to a letter to its right, capitalize it.

Since the two components of a "gallows" always connect, do not capitalize them. Capitalize only the elements of "intruding gallows", that is, gallows cut through by a line connecting two flanking letters as in Currier's <Q>, <W>, <X> and <Y>.

Note. The capitalization scheme is only there to make transliterations look very much more Voynich-like when viewed with the F3W00.FNT fonts file loaded. You may dispense with it.

Most cases of connected letter pairs involve a letter similar to Currier's <C>, which in Frogguy is represented by a lowercase [c] (Basic Frogguy) or [e] (Frogguy-3) when on the left and a lowercase [t] when on the right (so that Currier's <S> is [ct]). So Jim's X55 is, naturally, [ea], his X9 [ot] and his X10 [it] without any ambiguity possibly arising.

Where the connection involves neither [e] nor [t], use a hyphen to represent the connecting line, or capitalize every letter connected to another on its right, e.g. Jim's X91 = [a-a] or [Aa]

Intruding gallows are always flanked by connected letters, so that "intruding", entirely determined by the context, is redundant. The uppercase set of gallows elements in F3W00.FNT is there only for aesthetic purposes.

The above description reveals some of the arbitrariness involved with with transliterating an unknown set of characters, but even known languages have suffered from this frailty. See, for instance, the differences between the older Wade-Gyles and newer pinyin transliterations of Chinese.

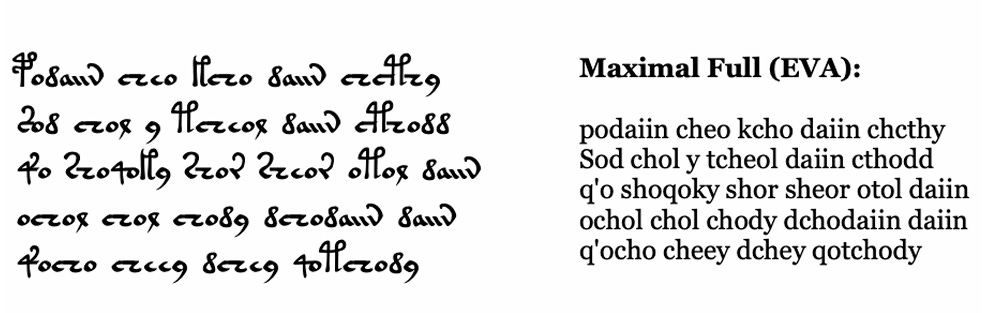

To illustrate what transliteration looks like in the case of the VMS, the LLCB provides a visual depiction of the conversion into the EVA (Extensible Voynich Alphabet) system.

Page 12 of the LLCB

Back to Entropy

Returning to entropy, measuring entropy does not “depend on which character set or alphabet is used, but only on the probabilities or frequencies. This means, that the entropy of a text does not change if it is subject to a simple substitution encoding.” Researchers can apply an entropy analysis to single characters as well as words, though the results may be skewed slightly by the writing style and vocabulary of the author. Conditional entropy considers peculiarities of the language, such as the frequency value of proximity between certain letters like ‘q’ and ‘u’ in English. Similar frequency hits in Voynich would potentially help home in on grammatical or lexical structures.

Entropy analyses of the VMS often focus on character counts. Analysts generally embrace the following trajectory:

The first order entropy, or single character entropy, is computed from the distribution of character frequencies in a text. The second order, or character pair entropy is computed from the distribution of character pairs.

If there is no meaning behind the text—i.e., it is gibberish—character pairs would have no reliable frequency distribution, that is, unless a clever composer opted to utilize character pairs merely as an attempt to hide otherwise nonsensical text. Nevertheless, this seems improbable if not impossible given that at the time of the writing of the manuscript, the concept of linguistic entropy simply did not exist. Thus, no one attempting to engage in a hoax would know to take countermeasures against this type of analysis. (Scholars are confident of the estimated time of the creation of the VMS; see the previous article in this series for more detail). The VMS contains low character pair entropy values, the significance of which is discussed further below. It is worth noting, too, that the value of character pair dependency also may be affected by how the analyst values character spaces. The usual presumption is to assign such spaces the value of ‘word separator’ without definitively knowing if that is their purpose.

Complicating matters a bit more, the LLCB notes that the researchers detected that several different scribes “authored” the VMS using two different “languages,” A and B. As René Zandbergen has described, these are not necessarily different natural languages, but their variances require attention. The LLCB considered this issue as follows:

There is evidence that more than one scribe produced the text. Currier (1976) noted the existence of multiple scribal hands, and he also classified pages of the text into two different “languages” (Currier Language A and B) based upon consistent and marked differences in the frequency of certain words and glyph combinations. The usage of the term “language” is misleading, because Language A and Language B do not necessarily represent different natural languages. There are substantial similarities of structure and vocabulary. They may represent different dialects of the same language, or they may represent the same dialect but use a slightly different encoding scheme. With a small number of exceptions, every folio is written in only one Language and Scribal Hand, and each Scribal Hand employs only one language.

The LLCB examined the conditional entropy of the VMS and found that no matter which transcription scheme they used (i.e. Frogguy, EVA, etc.) for the analysis, the VMS contained significantly lower entropy values than any other text, contemporaneous or not. Nonetheless, the LLCB notes that the frequency distribution of characters comports with the structural average of known alphabets, and that the distribution itself is “fairly typical.”

The position of characters, however, reflects atypical predictability. While the values differ between “languages” A and B, the disparity is not significant (about 3%). LLCB found that the VMS contains overall entropic values closest to the following languages: Hakka (2.85), Venda (2.88), Min Dong (2.90), Tswana (2.93), Pali (2.95), Atikamekw (2.97), Hawaiian (3.01), and Lojban (3.02).

The VMS contains such a low conditional entropy value because it has such a high volume of frequent, highly predictable bigrams. A bigram is a pair of adjacent characters or letters in a sentence whose frequency of appearance can be reliably predicted in a specific language. For example, in English, the letter ‘q’ is followed by the letter ‘u’ 93% of the time. For other letter combinations, the frequency is far lower and thus largely unpredictable.

Other languages, however, contain far more predictable frequency of bigrams. The LLCB points out that the possibility of certain “letters at the end of the word is common for languages with low conditional character entropy.” As examples, it cites Hawaiian, whose words end with a vowel 94% of the time, or Min Dong, where 84% of words end with a vowel, k, or g. Voynichese’s conditional entropy is even lower than these, meaning that these types of frequency characteristics occur even more often (remember, low entropy equals higher predictability). From this, the authors conclude that “Voynichese most closely resembles tonal languages written in the Latin script and languages with relatively limited syllabic inventories.” But, they caution against making presumptions based on this:

We do not take this as evidence that the language underlying Voynich is likely to be from the Niger-Congo, Malayo-Polynesian, or Tibeto-Burman families. A more reasonable scenario is that the script ignores certain sound distinctions that are made in the underlying language, as Bennett’s sample of Hawaiian did not include vowel distinctions and the Venda wikipedia text does not mark tone. Whatever method was used to generate the Voynichese script, it created written words that are highly constrained in form.

From word to word, René Zandbergen has illuminated some other interesting features of the Voynich construction first propounded by Captain Prescott H. Currier. Currier found that words ending in a certain symbol were followed by words starting with another specific character four times as often as other word-character combinations. Describing this, he wrote:

It’s something that has to be considered by anyone who does any work on the manuscript. These phenomena are consistent, statistically significant, and hold true throughout those areas of text where they are found. I can think of no linguistic explanation for this sort of phenomenon, not if we are dealing with words or phrases, or the syntax of a language where suffixes are present. In no language I know of does the suffix of a word have anything to do with the beginning of the next word.

Both individual character entropy and the relationship between word-finals and subsequent word-beginnings indicates that the VMS is neither Latin nor a representation of Latin in a simple cipher. Zandbergen ponders whether the entropy analysis indicates an underlying process. The distance between properties of the VMS language and those of known languages is such that Zandbergen concludes it is either “a drastically different language,” or it employs a conversion method that prompted significant changes to the distribution of characters.

Zandbergen also considers whether the entropy values could be anomalous because of the tendency of medieval scribes to utilize abbreviations. He quickly dismisses this possibility stating that compressing (i.e. abbreviating) character strings will lead to increased entropy, but “a process converting a plain text with higher entropy to the Voynich MS text is equivalent with some kind of expansion. Now, replacing Voynich MS characters with further expansion will not increase the entropy, but rather reduce it.”

Conclusion

Linguistic entropy analyses suggest that Voynichese is, in fact, a real language—if a rather peculiar one. If not, it at least follows some conventions of natural languages. This kind of examination also remains unaffected by the application of a simple cipher, as linguistic entropy is indifferent to it.

What scholars conclude from this seems to be that the language employs unknown idiosyncrasies of scribes of the day, represents an entirely unknown language that contains similarities to known natural languages but possesses some unusual traits, or that there is something else going on with the linguistic structure that we so far do not understand. For the most part, there appears to be some consensus that the text is not gibberish or a hoax.

This brings us to a final possibility. Could the VMS comprise a carefully designed code of complexity greater than simple ciphers (like the Caesar cipher, for example)? In my next Voynich installment, I will explore whether we are met with a mysterious form encryption so far unencountered in historical texts.

***

I am a Certified Forensic Computer Examiner, Certified Crime Analyst, Certified Fraud Examiner, and Certified Financial Crimes Investigator with a Juris Doctor and a Master’s degree in history. I spent 10 years working in the New York State Division of Criminal Justice as Senior Analyst and Investigator.

I was a firefighter before I joined law enforcement and now I currently run a non-profit that uses mobile applications and other technologies to create Early Alert Systems for natural disasters for people living in remote or poor areas.

Intriguing and mystifying.

If you and your team figure this out you will be ridiculously famous! I would say good luck but I think it will be all skill !