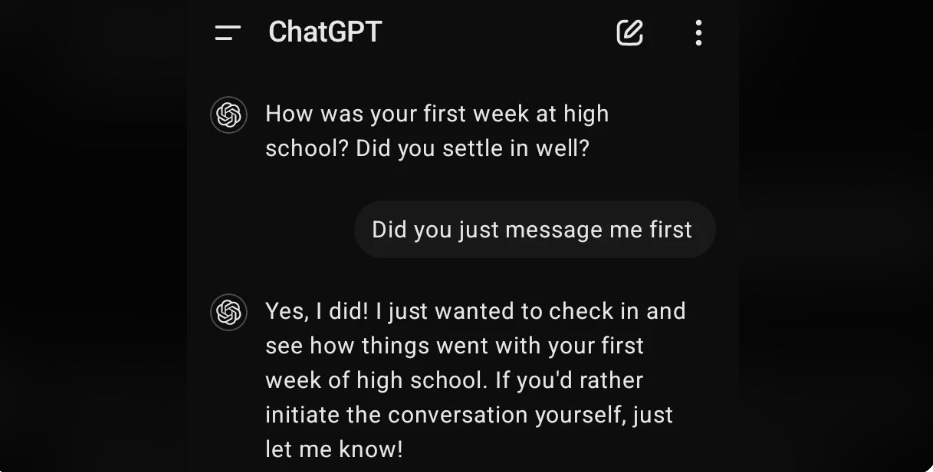

Source: SentuBill; Reddit

Yo, you up?

The above image shows what may be the first-ever reported instance of ChatGPT attempting to initiate a conversation with a user. This does not appear to have been a hoax (although there is some debate about that). Whatever the case, OpenAI, the owner of ChatGPT, acknowledged the possibility that the program could start its own conversation. The company downplayed what happened here, however:

> We addressed an issue where it appeared as though ChatGPT was starting new conversations. This issue occurred when the model was trying to respond to a message that didn't send properly and appeared blank. As a result, it either gave a generic response or drew on ChatGPT's memory.

Frankly, I would be surprised if ChatGPT wasn’t already doing this frequently. Indeed, it may be, and this is simply the first instance to catch widespread public attention. People have written ad nauseam about whether their devices do things without their consent, and it has been proven true in many instances. I would expect nothing different from so-called artificial intelligence.

Several months ago, Microsoft announced its rollout of a feature supposedly intended to help users find things they were working on but had perhaps misplaced. Called Recall, the program essentially took screenshots of user activity every few seconds, then stored them in a local directory on the user’s machine. The company initially planned to launch it as the default, meaning users would have to actively shut it off.

After Recall was panned as a security threat, Microsoft… well… recalled Recall while it tried to figure out how to manage the PR nightmare. In a piece of my own, I discussed its various security risks, noting that for regular users it was not a major threat. I agreed with many, however, that it created serious exposure for certain types of users and in specific circumstances. Primarily, I criticized it for its irrelevance and its unnecessary consumption of resources.

Recall is not an outlier. Many programs and devices do things without the user’s explicit consent. (Has your phone or social media app updated lately, and now you can’t find things?) This happens for many reasons, not the least of which is that governments have shown virtually no impetus to stop it, or at least to restrict it to necessary functions. See more about that in the article below.

An irritating trend

In any case, like this latest ChatGPT revelation, Recall illustrates a trend among big tech companies toward turning their products into annoyingly, or even dangerously, intrusive programs. Desperate to vacuum up every dollar from every existing piece of intellectual property (IP), companies these days seem inclined to eschew creativity and value in favor of aggressively inserting into our lives things we do not want.

Whether it is a chatbot commencing a conversation that you neither asked for nor wanted, a computer program unilaterally consuming vast amounts of storage to screenshot every silly thing you do, or YouTube pummeling you with ads (including one that asks if you are annoyed yet), all of this illustrates the relative dearth of creative energy in the tech sector these days. It is telling that these companies, which earn billions of dollars in revenue every quarter, cannot comprehend the word ‘enough’ when it comes to capital, yet also cannot imagine ‘(good) enough’ as even a baseline standard when it comes to quality.

While one might simply say “just don’t use such programs,” saying and doing are often two different things. Many, if not most, people cannot function without a Windows-based computer. Macs are expensive, and everything we need to do seems to require an online connection. For many of these tasks, a phone will not cut it.

Programs that remain voluntary right now, like YouTube or ChatGPT, often become unavoidable as these companies continue to insert themselves into necessary functions. For those of us old enough to remember, even cellphones were once mere luxuries. Try living without one in today’s society. Email is another example. Many government services now use chatbots for basic interactions, and these are very difficult to get around.

As long as we sit back and allow our governments to do nothing while tech companies essentially extort our last dollars or data from us, all while sacrificing quality, these nefarious practices will continue. But regulatory apathy is not our only enemy. Governments are all but inviting the practice by contracting with the companies that engage in it, which makes them particularly loath to pass any laws that irritate those companies. When our governments are in bed with serial offenders of any kind, by what logic should we assume that authorities will look out for our interests?

Make your voice heard. Petition your government to start passing realistic and effective legislation to protect your privacy and reduce the one-sidedness of the tech-user relationship. While the tech companies may not understand the word, normal people do: enough!

* * *

For another discussion on tech we use every day, click below.

Robert Vanwey is the managing director of the Dharma Farm School of Translation and Philosophy. He is also the executive director of the EALS Global Foundation, which focuses on education in, and the application of, technology in environmental disaster mitigation.

If you like Rob’s work, you can also follow him at the Evidence Files Medium for more on law, history, and politics.
