Why Private Capital is Bad for the Future of Tech

Quantum Computing is going the way of other sectors

Example of a quantum cryptosystem layout; (Public Domain)

When any societal good depends upon private investment not just for its implementation, but also its research and development, history has shown that the outcome tends to be negative, both in the long and short terms. Quantum computing’s progression so far remains heavily dependent upon private equity, suggesting that its capacity to “change the world” will only come to fruition to the benefit of a very small segment of society. In other words, it might change the world, but how?

One can look at the automotive and streetcar industries in the United States as an early example of the perils of private equity’s control over progress.

Private capital derailed American public transportation

Rather than gravitating toward robust public transportation—an ostensibly lower-return commodity—private interests instead focused on developing the personal automobile and its surrounding infrastructure. This helped bring the government onboard in the same pursuit. In a rush to profit from the budding industry, private interests sought to capitalize on technological gains before they were sufficiently developed.

This crippled transportation sectors that over the long-term would have led to a vastly better outcome for the environment and for individuals’ quality of life. Instead, the United States moved toward an interstate system that prioritized the passenger car, leaving most cities and virtually all rural areas bereft of good public transportation. Furthermore, public transport in the US came to be viewed as a form of “social welfare,” impeding its adoption even more.

Traffic jam in Los Angeles, 1953 (public domain)

According to MarketWatch, Americans in 2024 need to make $100,000 in income to afford an average-priced new car if following a sensible budget allocation (meaning that automobile expenses stay within 10% of gross annual income). This puts the price of new cars out of reach for 60% of US households and 82% of individuals. For this reason, the average age of vehicles on the road is the highest it has ever been.

As a car ages, its negative impact on the environment worsens. In nearly all countries, emission standards for older vehicles are lower than those for new cars, or entirely nonexistent. In countries with higher requirements for older vehicles, those that fail to comply are often sold to countries without such stringent regulations, thereby keeping them in the pollution rotation somewhere. Used cars also tend to be less mechanically sound, contributing to higher injury and death rates in accidents.

In 2022, an analysis by Consumer Affairs showed that 8.3% of households had no access to a car at all, and 14% did not own or lease one (some used ride-sharing or borrowed vehicles). The combination of excessively expensive cars and poor public transportation has left 5.7% of the population without sufficient access to transportation for even basic day-to-day functions.

Cars became so expensive for several reasons. First, changes in how emissions standards are imposed led to the tendency of automakers to produce bigger, pricier models. Before, the baseline miles-per-gallon was used. Today, the metric is a vehicle’s footprint, or “how well a model performs for its size—rather than the average for all the vehicles a company sells.”

Second, as with many technology devices, automakers have taken active measures to prevent consumers from repairing their own vehicles. Under the banner of the “right to repair,” many have protested the proprietary software and remote shutdown mechanisms that keep consumers from making their own repairs. This forces owners to defer to manufacturer-approved shops for any necessary fix, no matter how minor, often at great cost. So far, consumers are largely losing this fight because of the imbalance of power with big business.

A third reason has to do with disruptions to the supply chain. During the COVID pandemic, this phrase became so common in households that it now virtually lacks meaning. Nonetheless, it describes any inhibition to the movement of critical components throughout the manufacturing process. Because most consumer items are crafted from parts made across the globe, the possibility of a crucial component suddenly becoming temporarily unavailable is high, as a result of issues like severe storms, accidents, war, or… well, pandemics.

While the costs continue to rise, automakers also profit from their customers by selling all the data associated with who they are and how they use their products. This actually increases consumer costs because the data is typically sold to insurance companies who use it to raise rates. Higher repair costs also raise insurance rates for consumers.

Because profit margins ultimately rule the day when it comes to consumer cost, any and all of these issues provide both a real reason and an available fabricated narrative to keep prices—and profits—exorbitantly high.

What happened to transportation is happening to tech

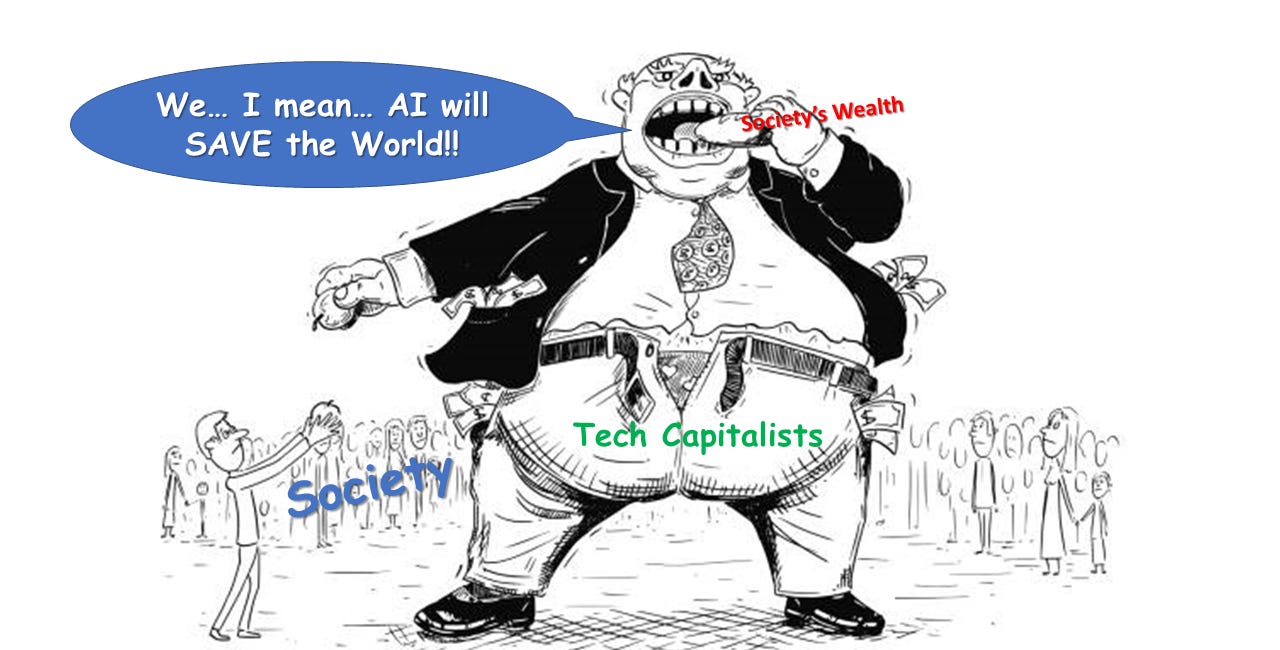

Big tech is following the same paradigm, injecting huge amounts of money into advances such as AI and quantum computing. The result has so far not been great. AI, an extraordinarily useful tool even in its current manifestation, is instead being turned into a tool of decline.

Its pervasion into virtually everything is causing massive environmental damage for little to no gain—and more often a loss—in quality of services, but extraordinary gains for investors. Moreover, AI is largely responsible for the increasingly sinister hellscape that the internet has become, encapsulated in the Dead Internet Theory.

Credit: Reuters

Many services that for decades were included in the cost of other services or platforms (such as part of an operating system) are slowly converting to pay-to-use services. AI is expensive, so adding it to simple applications raises the cost of running them, which is passed along to consumers.

The road to developing quantum computing is beset with all the same mistakes, or arguably purposeful capital-driven malevolence. In this case, the result could be poorly developed quantum computers that create a brief bubble, then fail when those financially supporting them finally see through the hype.

Alternatively, what could one day be an extremely helpful technology will take decades longer to come to some useful fruition, if it ever does, as investors pull out and research diminishes for lack of support.

Finally, and most ominously, even if quantum computing reaches its zenith of utility, its chief benefits will become increasingly unavailable to large numbers of people or reserved only for the wealthy in the first place. Worse, it could become weaponized by the powerful against the rest of society much like the internet has generally.

Bloch sphere, a geometrical representation of a two-level quantum system. By Smite-Meister, CC BY-SA 3.0.

How quantum computing began

In 1984, Charles H. Bennett of IBM Research and Gilles Brassard of the University of Montreal presented at the International Conference on Computers, Systems & Signal Processing in Bangalore, India. The paper they produced on the heels of the conference opened with a statement that would lead to what now might be characterized as another overhype of technological progress, quantum computing. They wrote:

When elementary quantum systems, such as polarized photons, are used to transmit digital information, the uncertainty principle gives rise to novel cryptographic phenomena unachievable with traditional transmission media, e.g. a communications channel on which it is impossible in principle to eavesdrop without a high probability of disturbing the transmission in such a way as to be detected.
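The detection property described in that passage can be illustrated with a toy classical simulation of the Bennett and Brassard scheme (commonly called BB84). This is only a minimal sketch under stated assumptions, not a model of real hardware: each photon is represented as a (bit, basis) pair, the eavesdropper uses a simple intercept-and-resend strategy, and the function names `measure` and `bb84_error_rate` are hypothetical.

```python
import random

def measure(bit, prep_basis, meas_basis, rng):
    """Toy measurement rule: reading a photon in the basis it was prepared in
    returns the original bit; reading it in the other basis returns a random bit."""
    if prep_basis == meas_basis:
        return bit
    return rng.randint(0, 1)

def bb84_error_rate(n_photons, eavesdrop, seed=0):
    """Return the fraction of mismatched bits in the sifted key
    (positions where sender and receiver happened to pick the same basis)."""
    rng = random.Random(seed)
    errors = 0
    sifted = 0
    for _ in range(n_photons):
        # Sender picks a random bit and a random basis ("+" or "x").
        alice_bit = rng.randint(0, 1)
        alice_basis = rng.choice("+x")
        bit, basis = alice_bit, alice_basis

        if eavesdrop:
            # Eavesdropper measures in a guessed basis, then resends
            # what she saw, prepared in her own basis.
            eve_basis = rng.choice("+x")
            bit = measure(bit, basis, eve_basis, rng)
            basis = eve_basis

        # Receiver measures in an independently chosen random basis.
        bob_basis = rng.choice("+x")
        bob_bit = measure(bit, basis, bob_basis, rng)

        # Only positions with matching sender/receiver bases are kept.
        if bob_basis == alice_basis:
            sifted += 1
            errors += bob_bit != alice_bit
    return errors / sifted

if __name__ == "__main__":
    print("error rate, no eavesdropper:  ", bb84_error_rate(20000, False))
    print("error rate, with eavesdropper:", bb84_error_rate(20000, True))
```

In this toy model an undisturbed channel produces no errors in the sifted key, while intercept-and-resend eavesdropping pushes the error rate toward roughly one in four, which is the kind of disturbance the two parties can detect by comparing a sample of their bits.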

What followed were various papers that provided a theoretical basis for how engineers could capitalize on the superposition principle to create veritable super supercomputers.

In 1994, Peter Shor developed a quantum algorithm for factoring large integers. Because the security of RSA encryption rests on the difficulty of factoring, a sufficiently capable quantum computer running Shor’s algorithm would render even RSA no longer viable. But that holds only if the algorithm can actually be run on quantum hardware at a scale large enough to attack real-world encryption.
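Why factoring matters for RSA can be seen in a toy example. The sketch below uses deliberately tiny textbook primes (an assumption for illustration; real keys use primes hundreds of digits long) and a brute-force classical search standing in for the factoring step. It does not implement Shor’s algorithm; it only shows that anyone who can factor the public modulus can rebuild the private key. The helper names `factor` and `recover_private_exponent` are hypothetical.

```python
def factor(n):
    """Trial division. Feasible here only because n is tiny."""
    p = 2
    while p * p <= n:
        if n % p == 0:
            return p, n // p
        p += 1
    raise ValueError("n has no nontrivial factors")

def recover_private_exponent(n, e):
    """Rebuild the RSA private exponent from the public key alone by factoring n."""
    p, q = factor(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse; needs Python 3.8+

# Key owner: picks two secret primes and publishes (n, e).
p, q = 61, 53                      # toy values, assumed for illustration
n = p * q
e = 17
d = pow(e, -1, (p - 1) * (q - 1))  # private exponent, kept secret

# Someone encrypts a message with the public key.
message = 65
ciphertext = pow(message, e, n)

# Attacker: sees only n, e, and the ciphertext.
d_attacker = recover_private_exponent(n, e)
recovered = pow(ciphertext, d_attacker, n)

if __name__ == "__main__":
    print("attacker recovered the private exponent:", d_attacker == d)
    print("attacker recovered the message:", recovered == message)
```

With realistic key sizes the `factor` step is what becomes infeasible for classical machines, and it is that step a large, error-corrected quantum computer would in principle replace.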

Since 1998, researchers have been building real-world quantum computers as proofs of concept. That year, Isaac L. Chuang, Neil Gershenfeld, and Mark Kubinec developed a two-qubit computer that seemed to confirm the theories and suggested that a fully functional quantum computer could be built.

In 2019, a debate emerged when Google claimed to have conducted a calculation with its 53-qubit quantum computer that would take a normal supercomputer “10,000 years” to perform. IBM quickly published a retort that the actual time necessary for a “classical system” to perform the function was 2.5 days. Quite the difference.

The reality is that Google’s claim and IBM’s response were both inconsequential. Google’s experiment relied on perfect conditions for its quantum computer—a nearly impossible state—and compared it to what essentially amounted to a fictitious system, meaning one probably nobody would actually use in the real world. Moreover, the entire test was a mere simulation. In short, it was a competition between two toys, not two tools.

Researchers published a paper in August of 2024, indicating the discovery of a new superconductor material. Superconductor materials are critical to the function of quantum computers because such systems must be kept extremely cold to avoid the effect of “noise,” or environmental conditions that can disrupt the function of qubits, a condition called decoherence. Decoherence leads to errors. More “noise” contributes to higher rates of decoherence, eventually causing enough errors to make quantum functions completely unreliable.

Ordinary conductors, however, still have some electrical resistance at those temperatures, and resistance produces heat and signal loss that inhibit function. Therefore, the discovery of superconductors is critical for quantum computers to operate without error because they carry current with no resistance at extremely low temperatures.

So far, this all sounds like progress with just a few fits and starts. Right?

The Problem

When technological advances depend upon private equity, the timetable by which the technology must turn a profit inevitably shortens. Historically, this leads down a predictable path that ends in a “bubble” that ultimately pops, with catastrophic consequences. What arises out of the ashes almost always brings poor returns for the majority of investors and the public at large.

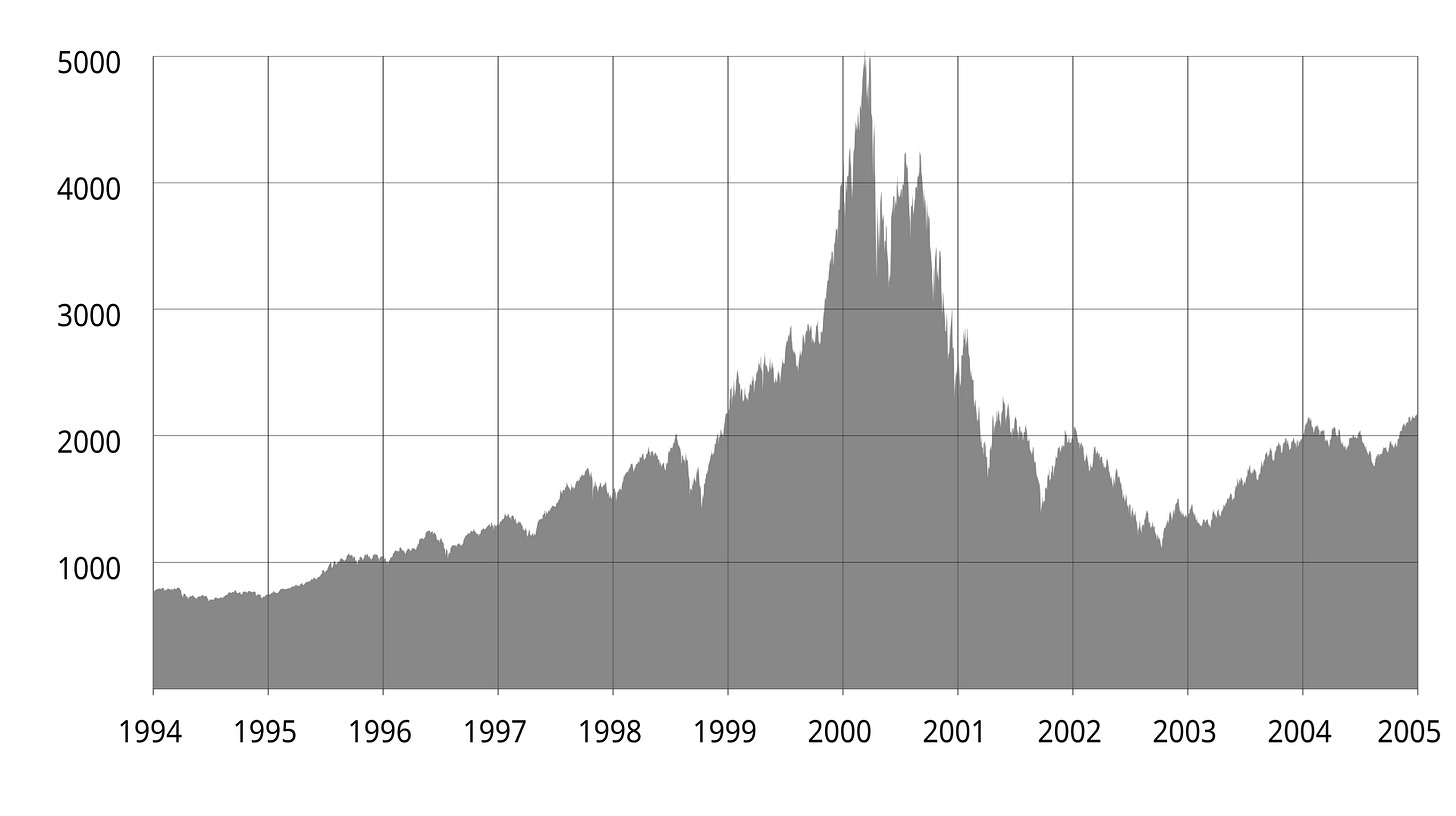

From about 1995 to 2000, investors engaged in a frenzy of speculative investing in internet companies, a situation that later became known as the “dot-com bubble.” The drive for constantly rising profits gave birth to many aspects of the internet that people have come to hate. Examples include the advent of internet advertising and the concentration of control of most platforms into the hands of the few wealthiest investors or groups. Large numbers of start-ups were vacuumed up or destroyed, leaving small numbers of people to greatly prosper while most went broke.

The NASDAQ Composite index spiked in 2000 and then fell sharply as a result of the dot-com bubble. (Public Domain)

Harlan Lebo, a cultural historian at the Center for the Digital Future at the USC Annenberg School for Communication and Journalism, summarized it as follows:

Much of the “growth” of new internet companies was a façade, an industry fed by novelty and perceived investment potential – but in most cases without planning or financial evidence to back up the talk. Hard-boiled financiers threw common sense out the window, investing in companies that, with even a moment of consideration, would have been viewed as the most absurd folly.

The few survivors of the dot-com bubble eventually took control of the modern internet.

By 2024, just two companies—Apple and Google—capture 85% of the internet’s market share, and 99% of browser use. Google controls over 84% of internet search capability, from which it abundantly profits. The two companies also provide about 90% of all email services. Amazon and Microsoft own 50% of all cloud-based services. In short, the internet has become little more than a cash register for just a few companies, not the free “land of ideas” it once was thought it would become. And users don’t like it.

Like the internet, innovation itself is also backsliding. The Harvard Business Review reported that innovation in the United States started slowing in the 1970s, reaching a 100-year low in 2019. It found the reason to be primarily a split between “corporate and academic science.” Put simply, corporations have invested less and less in scientific research, choosing to put venture capital into the production of applications instead. This has led to the premature release of technologies, which is why things like AI and the internet largely suck as widely accessible tools.

Relatedly, the MIT Technology Review noted that “proprietary information technology in the hands of large firms that dominate their industries” remains out of reach of innovators who do not work for those firms. Even when a small firm manages to “disrupt” the industry with a revolutionary idea or product, large companies effectively smother it through their overwhelmingly large customer shares and available capital. The small companies either fold or sell off their assets to these larger companies who can then completely quash them, or incorporate them into their own asset structure (the latter of which usually leads to enshittification).

Overhype

In nearly all cases where private equity gets behind an idea, along comes an over-abundance of hype. This is necessary to ensnare investors by diminishing their ability to critically calculate the veracity of claims. In the 1990s, investors were swayed by announcements about how the new internet would “change the world.” As Brian McCullough explained:

It became a joke that the dot-coms that started out promising a grand vision of a more efficient way of doing business were — almost to a company — unprofitable.

And in retrospect, the impact was inevitable:

By 2002, 100 million individual investors had lost $5 trillion in the stock market.

Fast forward to the 2020s. Marc Andreessen, one of the investors who managed to succeed through the dot-com bubble, is hawking the same nonsense about AI. He claims it will be “a way to make everything we care about better” and that it “will save the world.” Sound familiar?

Venture Capitalist Makes a Strong Argument for Regulating AI (without meaning to)

With quantum computing, the situation is more of the same. Look at this graphic from a search on YouTube:

These are not randos on the internet. The four videos in that graphic alone received nearly 12.1 million views. Look at the underlying trend: quantum computing is revolutionizing the world essentially “now.” This seems rather peculiar when you consider that just days ago, on November 14, Forbes published the following:

The quantum security market has had a gear shift on it. Initially, the market was robust in fifth gear because we were afraid quantum computers were going to destroy encryption as we know it.

Now, that growth has slowed a bit, as the reality of a cryptologically relevant quantum computer is still at least an estimated five years away.

That was written by John Prisco, Security CEO & founder of Safe Quantum Inc., whose company works “with data-driven companies to develop and deploy quantum-safe technologies.”

Indeed, evidence suggests that quantum computing is already facing the downward trajectory of the hype bubble. According to the State of Quantum 2024 Report, investments in quantum companies are down by 50% from 2023 in the US. The report views this as a natural progression of investment strategy, but it may simply be that reality is catching up.

The notion of the turning of the corner being “just down the road” is not new. Companies have been investing in quantum computer startups since at least 2012. In 2018, Yale’s Quantum Institute reported:

Some VC investors are betting on a breakthrough that brings general-purpose quantum computers to fruition in five or ten years. Others are banking on making just enough progress for another firm to buy them out. Many also hope scientists can find applications for relatively small, imperfect quantum computers, which might emerge sooner. These would be limited to tackling specific questions, such as simulating a reaction in quantum chemistry or optimizing a financial model. They might not perform better than a classical computer that has unlimited computing resources, but they could still create marketable products.

Now six years later, none of this has manifested. No quantum computer has been able to perform any practical application that brings it anywhere near monetization or significant progress. “Relatively small, imperfect” versions have also not amounted to anything useful so far.

In a survey of people in various fields and those who might one day use quantum computing in their business, respondents were asked what they expect from it with respect to their organizations or disciplines. The largest number of respondents answered: “solve previously unsolvable problems.”

Source: Quera.com

There is a curious irony here. A technology that its stakeholders have been convinced will solve previously unsolvable problems is itself currently a largely unsolvable problem. Moreover, just as AI was once purported to be the next thing to “save the world,” quantum computing is alleged to deliver the same. Yet, in both cases, the promised delivery date keeps stretching ever further into the future.

Unchecked optimism continues to propagate a futuristic fantasy. Just about five months ago, physicist Michio Kaku proclaimed to an audience in the Beacon Theater:

Now we are entering the fourth great era of scientific innovation and wealth generation: artificial intelligence and quantum computers.

Even if he is correct, if these advances are driven by private capital, the wealth generation will be limited to a rare few. Yes, eventually people will (probably) benefit from some version of the production. But like the current day’s internet, it will be a monetized, flawed commodity that may cause as much disruption as gain, if not more.

What can be done?

The problem does not lie in whether these advances in technology can contribute to improvements for society. Both the internet and AI already have done so, but with severe caveats. Where the concern lies is in how these technologies are applied, and under what motivation.

To put it simply, it might indeed be possible to deploy AI or quantum computing toward finding solutions to climate change or stopping exorbitant CO2 emissions, for example. Unfortunately, unless the process produces profit, it will simply never happen… at least under the current paradigm.

Without breaking apart the monopolies that control all the major technological platforms, future development will only occur if they can profit from it. True innovation will remain a plaything of vast corporations, and the benefits will only trickle down in the form of a license for the public to use it rather than own it. This also means a large part of the global population will forever lack any access whatsoever and wealth inequality will continue to rise.

Disconcertingly, trust-busting alone will not be enough, however unlikely it seems that even that could succeed. Powerful tools that humankind has repeatedly shown it is not ready for provide extraordinary weapons for the worst actors. These include evil leaders of state as well as avaricious venture capitalists and narcissistic billionaires. But the problem is still deeper than that.

As AI’s deployment has shown, unintended consequences inevitably emerge when the public’s well-being falls far down the priority scale. Heaving dangerous, understudied technologies on society rarely leads to net-positives for humankind, but profiteering all but requires it. The problems are readily notable in the trajectories of AI, the internet, platform services, satellite technology, and many others.

Most countries have regulatory agencies that require extensive testing of medicine, food, and other items that can impact public health. Unless governments start treating technology the same way, humanity will become mired in ever graver danger. The next decade may be the turning point for the species in a way that few evolutionary biologists ever anticipated.

It might already be too late to heed the warnings of philosophers and other visionaries, such as George Orwell, to avoid a tumultuous, unpleasant future. Nonetheless, that dystopian outlook might just be the best case scenario we have left.

* * *
