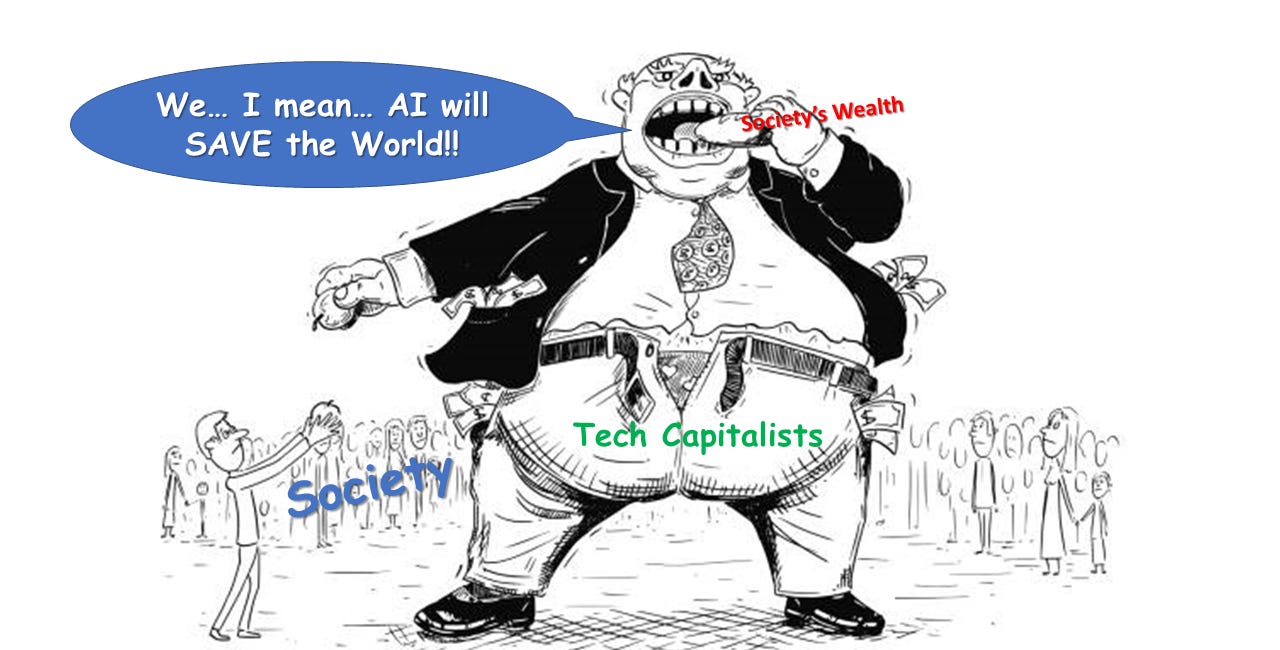

Image created by author.

To Think Like a Human

NeuroAI Scholar Kyle Daruwalla, of the Cold Spring Harbor Laboratory, recently described the path by which he has made a purported breakthrough in the way Artificial Intelligence (AI) processes information:

There have been theories in neuroscience of how working memory circuits could help facilitate learning. But there isn’t something as concrete as our rule that actually ties these two together. And so that was one of the nice things we stumbled into here. The theory led out to a rule where adjusting each synapse individually necessitated this working memory sitting alongside it.

At issue was overcoming the “billions and billions of training examples” required for an AI to perform to even marginally acceptable standards. To eliminate or at least reduce that complication, Daruwalla focused on enhancing the efficiency of moving and processing data.

He looked at the way human brains do it as a model. But there is a significant problem with this: while humans are capable of swiftly processing information to make on-the-fly decisions, they do so at the expense of the totality of data. More importantly, the methodology by which humans think works in direct contrast to the elementary principles of computer design.

In my piece on whether AI will ever become conscious, I discussed the concept of transperspectivism. As a reminder:

Transperspectivism is the belief that there’s no single perspective on reality, which means that each individual has their view and nobody’s suitable to be considered more right or more proper.

To quickly summarize, humans simply cannot comprehend the vast amount of data comprising their environment, so they “rely on a social reality construct to manage day-to-day survival.” In this way, they are able to take intellectual shortcuts to manage their affairs, shortcuts built on the work of others that they then refine to meet the needs of a given moment.

As a result, because each person’s CliffsNotes version of comprehension is necessarily different, influenced by their own unique experience, no one can lay claim to holding an objective view of reality. In other words, we all survive within a subjective existence because every one of our lives has been different and our brains thus perceive the world differently.

The reasons this is problematic as a basis for AI thinking like a human are manifold.

First, and most obvious, AI does not have lived experiences. As of now, anyway, AI comprises mere programs set within stationary machines. Perhaps if one day AI is embodied it will enjoy its own life events, but for a very long time—maybe forever—that learned information will be constrained to the confines of its own existence, routinely shorter even than a single human lifetime.

Humans, by contrast, benefit from the length of their own existence as well as the evolutionary traits ingrained within them from eons of lives that came before. Whether such a genetic lineage could ever be imparted to an AI remains to be seen.

Second, humans generally do not grapple with raw data, but AI necessarily does. As Beau Lotto has suggested, because of their limitations with processing massive amounts of information, humans operate primarily on the ‘utility’ of data and ignore its raw form. This is an evolutionary trait meant to keep people alive by allowing them to react quickly and (reasonably) correctly without taking the time to analyze the entirety of a situation.

AI, however, cannot function this way. While it may be possible to maximize the efficiency with which AI prioritizes raw data, it does not seem probable that it could ever adopt the human ability to ignore it altogether. Thus, from this alone, the notion of an AI “thinking like a human” seems unlikely.

Third, even if that problem is somehow conquerable, it suggests then that AI would never resolve the issue of inaccuracy it already suffers in abundance, such as in the form of ‘hallucinations’ by chatbots, to name just one. Put another way, AI already struggles with accuracy despite training on unimaginably large datasets. Will reducing those datasets by ignoring certain portions somehow improve accuracy? It seems not.

As noted, humans can afford to process less input because they supplement it with lived and evolved experience. For AI to procure the same benefit means defeating an entirely new set of obstacles. In short, it would require programming AI with some form of intuition.

It's a matter of perception.

Even if it becomes possible to enable some level of instinct within an AI, it must still be able to produce outputs that are completely correct. The reason is that humans expect it to. An absolutely fundamental difference between the outputs of humans and AI is our perception of what each is capable of. Let me provide a hypothetical to illustrate.

If a human asks another what year Shakespeare wrote Hamlet, the value (i.e., the belief in its veracity or reliability) of the response would depend upon a number of extraneous and potentially subjective factors, factors we prioritize based on our personal experience and beliefs. These might include: 1) is the responder a professor of literature; 2) is the responder a history buff; 3) do I know this person well enough to feel confident in their intellect or knowledge? The answers to those internal inquiries or others like them, which most people contemplate largely subconsciously, significantly influence the inclination to accept or reject the response.

With machines, it is different. There is an inherent belief that machines—computers, CCTV, audio recordings, etc.—are objectively accurate. Before the advent of present-day AI programs that spew such farcical nonsense that many have come to distrust them, people largely accepted machine observations or calculations as ‘true’ because these machines did little more than connect inputs A and B to reach conclusion C. Reliability was the essence of their invention.

Virtually no one performed a manual calculation to assure that their TI-85 correctly produced the square root of something, for instance. The answer was simply accepted as true. And, despite its well-publicized flaws, very large numbers of people still rely on AI with the same level of confidence.

Long before ChatGPT and its ilk proliferated, I enjoyed watching a class of people learn how mistaken this belief in machine objectivity is. The US Secret Service was teaching us how to conduct advanced video forensics. In one of our sessions, the instructor played CCTV-captured footage of a crime. Afterward, he revealed how the camera, although it displayed what appeared to be a crisp, high-resolution image, nonetheless washed out the entire very large embroidered logo on the perpetrator’s shirt and projected a very different, and incorrect, color for the shirt itself. The reasons had to do with the lighting and the positions of both the camera and the subject. It was shocking because the output image looked so real, giving us no reason to doubt its veracity.

Chatbots and other AI engage in this same ruse today, but to billions of people in an even more believable way. And they are growing more convincing by the day. It is for this reason that (certain) politicians post deepfake content of themselves to bolster their image or of their opponents to create controversy where there is none, and that scammers use AI-generated voices to dupe their victims. People have been falsely arrested, have been discriminated against, have suffered medical harm, and have endured innumerable other injuries because users of AI have uncritically relied on its outputs. Developing more convincing AI will only exacerbate the issue.

The researcher featured in the article that inspired this piece, and others like him, are trying to make AI better. I get that. What they would likely argue is that they are seeking to capitalize on the attributes of human thinking while eschewing its detriments. From a conceptual perspective, I applaud their efforts. But the insertion of AI into nearly all aspects of our technological ecosystem has not proceeded with pragmatism. What has been foisted upon society instead is a premature, underdeveloped monstrosity whose primary capability so far has been infecting and sickening everything it touches.

AI has been designed to emulate human thinking, while adopting humanity’s worst elements (racism, misogyny, profiteering, lying) rather than its best. As long as people hold onto the belief that machines produce outputs unadulterated by human deficiencies like bias, malevolence, or mistake, far too many will accept as true any number of perversions.

Rather than spend an abundance of resources on correcting the efficacy problem, it seems most venture capitalists care more for improving AI’s persuasiveness to increase its capacity for monetization. If that trend continues, the AI that the public uses will function little differently from a suave conman, poisoning society with whatever villainy its profiteers purposely or inadvertently spread.

It is not at all clear to me that we would even want AI to think like a human, either from a content perspective or functionally. It seems there are considerable dangers to providing machines humanlike ‘mental’ abilities, dangers already manifested in less humanlike machines such as today’s LLMs. These include incorporating the biases and darker sides of humans, which are only amplified by certain of AI’s superior abilities.

Coupled with the ability to spread AI outputs with extraordinary precision, speed, or boundlessness, AI will become ever more hazardous, especially when in the wrong hands. And so far, no one has shown an effective enough way of avoiding these pitfalls.

If AI really is the technology that will ‘save the world,’ and ‘make everything better’ for everyone—a questionable premise, to be sure—then a critical path toward preventing it from exacerbating human deviance is to put its ownership in the hands of everyone so that society can see to it that it actually does either or both of those things. It should not serve as another piece of societal wealth stolen by the bigoted, petulant trolls who have expressed nothing but disdain for the very society they purport to intend to save.

* * *

To read more of my commentaries on AI, check out the articles below.

Venture Capitalist Makes a Strong Argument for Regulating AI (without meaning to)


Why AI Will Never be Conscious


Under the Hood of ChatGPT


GPT-4V - an LLM with "Vision"


I am the executive director of the EALS Global Foundation. You can follow me at the Evidence Files Medium page for essays on law, politics, and history, follow the Evidence Files Facebook page for regular updates, or Buy Me a Coffee if you wish to support my work.
