Image by author

As often happens on the holidays, conversations arise that leave the participants in very different places about some elements of perceived reality. I had just such a discussion recently, but it led me to pondering a very specific nugget that, frankly, did not consume much time during our exchange.

Someone made a rather conclusive statement, then quickly moved on as if it were universally accepted, but I was not prepared to dismiss it so quickly. Paraphrased, the basic flavor was “man cannot know everything.”

Indeed, the idea is not new among the philosophic ponderings on human progress. Some books of faith frequently pronounce mankind’s ostensible limits as a warning against sacrilege. Certain thinkers have noted that while knowledge may be limitless, human lifetimes are not. The Chinese philosopher Zhuangzi wrote, “to drive the limited in pursuit of the limitless is fatal.” These proscriptions often target the individual, cautioning against the presumption that any single one can know everything, an admonishment against the hubris that destroyed many apocryphal and historical figures alike.

The concept of what is “known” seems to have taken an acute battering in these days of abundant conspiracy theories and the “do-your-own-research” crowd, giving tangible proof of the wisdom of such warnings. This is not an article about that, however. Rather, I was drawn to the notion of a concrete limitation, a universal constant at which knowledge acquisition effectively must end, similar to the conception of the speed of light as the cosmos’s ultimate governor on interstellar travel.

The questions posed are thus: Is there an actual limit to human knowledge? If so, is the quantification or identification of the line itself knowable? Is there a difference, specifically as it relates to restrictions, between knowing and comprehending?

Before starting, some clarification is required. While there is an enormous philosophical canon on the subject, the terms herein are applied in the most simplistic sense. “We” refers to humanity generally. In practice it may refer primarily to a subset of people, such as scientists or mathematicians, but it means that anybody with the gumption and ability could theoretically learn the topic.

“Know” here means to be aware of, but does not imply technical knowledge. As an example, most people know cryptography exists and for what it is generally used. This differs from “comprehend.” To comprehend means to grasp the fundamental principles behind a thing we know.

Returning to cryptography, then, most of us know it exists and what it is used for (protecting our data on the internet or coding military messages), but comprehending it means grasping how it works. That requires understanding the mathematical and programmatic principles necessary to implement cryptography in some real-world application.

“Knowledge” here means that which we know and is refined by understanding. Knowledge is not presumptively correct, but evidence and testing suggest its tendency toward being accurate. For the purpose of this article, the knowable and comprehendible information discussed involves what could fairly be called objective: measurable, testable, and repeatable. It does not include subjective fields, sometimes referred to as “the liberal arts.” These fields include history, theology, and philosophy, among others. One might say that this treatise represents a technician’s view as opposed to a sophist’s.

To Know

“Any fool can know. The point is to understand.” Albert Einstein’s pithy quote illustrates the divide between the two concepts. To know is to discern certain phenomena based on our shared reality. No reasonable person[1] questions the effect of gravity, which is why people dislike heights, fear flying, or otherwise avoid situations exposing them to the calamitous effects that come from the end result of gravity’s influence (it is not the fall that kills you… it is the sudden stop at the end).

Yet, knowing does not always comprise understanding, an absence which routinely leads to knowing that which is wrong. Perhaps the most famous example is the long-held belief that the Earth sat at the center of the solar system while the sun and neighboring planets revolved around it (the geocentric model). From an observational standpoint, this made sense for some time given the availability of information and tools to test it.

People now understand the corrected, heliocentric model (actually first proposed in ancient Greece by Aristarchus of Samos), and an abundance of subsequent scientific inquiry and experiment has confirmed it. These two cases, gravity and the solar system’s configuration, illustrate that the human ability to know almost certainly remains boundless.

Aristarchus's third-century BC calculations on the relative sizes of (from left) the Sun, Earth, and Moon, from a tenth-century AD Greek copy (public domain)

The only curb on knowing is the potential for a phenomenon to go unnoticed, a status eroded by the passage of time and the growth of the population. In other words, virtually any happening that attracts human attention can come to be known, and the ever-growing age and size of society increase the chances of almost anything (or everything) grabbing that attention. The limit to knowing seems only a function of human curiosity, need, and time.

Standing on the Shoulders of Giants

Regardless of the foolishness to which knowing might lead, it is a necessary component of comprehension. The logical sequence is glaringly apparent: we cannot presume to understand a thing of which we are not yet aware. Even in the case of the unseen, such as bacteria or subatomic particles, we become aware of them by observing their effects, which leads us down the road to comprehension.

As so often happens, we observe something we cannot explain, which creates an inquisitive desire to learn. Over the course of that inquiry, we begin observing new phenomena that expand our awareness while simultaneously increasing the number of questions the inquiry raises. This in turn yields more observations, and the cycle repeats. So, knowing derives both from observing and questioning. But it neither starts nor ends there.

The foundation of knowing actually comes primarily from the progression of knowing-to-comprehension conducted by others. Put simply, we know, understand, or both, many things not because of our own intuitive awareness or studious inquiry, but because of the efforts of those who came before us. Most people, for example, understand the importance and purpose of antibiotics. But this comprehension (and its prerequisite knowing) did not arise out of our own examination.

Like many of the things we purport to know and understand, that knowledge emerged because we relied upon others, past and present, who commit themselves to sophisticated examination and, eventually, to understanding, and who then relay what they have learned to everyone else.

For most people, even the simplest functions in society never progress beyond the knowing stage, because we simply do not need to understand them. Some of the most brilliant people with whom we associate might be unable to describe the mechanics of a photocopier because, frankly, they need not spend the time to learn to do so. Our brains have limited capacity, after all, so economizing that space remains a priority, and perhaps an evolutionary imperative.

Moreover, the progression of science and technology requires paying homage to the need for efficiency. When the COVID pandemic started, scientists did not dither with the basic principles of virology or vaccinology; they attacked the problem armed with the knowledge of predecessors who had already done that work. When a person’s computer fails, most people do not spend weeks or months studying the architecture of operating systems; they bring the device to a specialist already trained in the field.

The entirety of human history has developed this way, both to economize progress and because it is necessary for overcoming increasingly complex obstacles. Basic sense dictates that we would not wait around as a society for computer scientists to independently develop calculus before tackling complicated computational problems, or for architects to reinvent the Pythagorean Theorem before designing a building.

The notion sounds, on its face, exceedingly ridiculous. Yet, conspiracy theorists and “deniers” of climate change or vaccination efficacy (or whatever the flavor is this week) ask you to do just that.

This is not to say that such reliance demands an uncritical acceptance of previous conclusions. Indeed, the pinnacle of groundbreaking achievement routinely happens as a result of an enterprising researcher noting some peculiarity in an otherwise widely accepted principle. But, just as knowing predicated on mere belief is inadequate, so too is asserting that a principle is deficient based merely on an intuition or ideological predilection.

The absence of a full comprehension of particular provisions of evolution, for example, does not reduce the entire concept to the status of fanciful belief. For a more blunt illustration, scientists do not yet understand even some of the elementary aspects of gravity, but no one disputes its existence or observable function. These intellectual “holes” do not offer a reason to discard anything; rather, they invite a deeper inquiry that must involve creativity, studious research, and sometimes a bit of luck to bridge the gap between knowledge spaces.

To know, then, is a fundamental element of a successful society, but one that comes with inherent danger. Knowing both with certitude and without understanding often becomes the tool of the ignorant or malevolent to impose upon others an intellectual stagnancy. They engage in this sort of villainy to obfuscate their individually limited capacity for comprehension or their desire for obtaining or maintaining power.

The impetus behind funding and supporting compulsory education exists, in part, to curtail this propensity. But knowing without comprehension is not intrinsically bad; it is a necessary component of civilized society, particularly one that wishes to progress into new scientific, technological, and other frontiers. Knowing without comprehension on some matters frees up the time, effort, and dexterity of thinking to achieve comprehension on others.

To manifest that success, knowing demands responsibility: a recognition of that which we do not know or understand, and a trust in those who endeavor to appropriately fill the gaps for us, leaving us free to turn our attention to those concepts in which we have greater interest or ability. Through this evolution, the human capacity for knowing seems limitless.

Comprehension

Comprehension consists of a much more nuanced arc than knowing. As discussed, knowing relies on assertions, which may or may not carry the validity of the foundational principles of science—measurement, testability, and reproducibility. As the commitment to knowing does not require the same rigor as does understanding, it can lead to the conflation of knowledge with belief.

Comprehension, on the other hand, all but dispels that confusion. We know of no objective way to determine “full” comprehension of almost any concept, and perhaps none exists. Expertise, rather, necessitates the recognition of two key features of any intellectual framework.

First is realizing with sophistication what elements of the framework are testable and reproducible such that little to no evidence exists indicating any inherent flaw. This still does not mean such portions of a theoretical model are perfect or infallible, but it suggests that they remain reliable absent any affirmative discovery to the contrary.

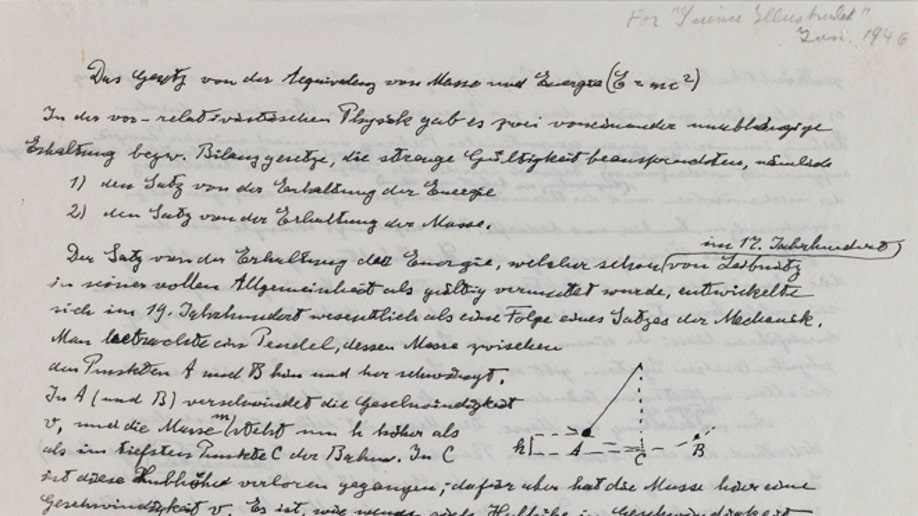

Einstein’s Theory of Relativity met with uncertainty and even skepticism in the scientific community, no doubt driven by its intensely radical ideas. More radical proposals tend to invite greater criticism, scrutiny, and hesitancy. As the saying goes, extraordinary claims require extraordinary evidence. Einstein provided an extraordinary articulation of his theory, but its conspicuous deviation from classical physics resisted acceptance without a great deal of corroborating evidence. Subsequent observations and experiments over the following decades eventually led to consensus among scientists that Einstein had it right.

March 19, 2012: Part of a newly revealed archived document, one of only three existing manuscripts which contain Einstein's famous formula on the special theory of relativity. (AP Photo/Hebrew University of Jerusalem)

Expertise, consequently, has a second feature: the recognition of the frailties of a concept. Just as an abundance of testing verified Einstein’s Relativity in the years that followed, further advances in quantum mechanics revealed acute flaws. These flaws do not demand discarding the theory. They instead open the door to further lines of inquiry. Quantum theory led to observations that, at a minimum, indicate our understanding of physics is incomplete. Einstein’s Relativity still holds in certain contexts, but its breakdown at the quantum level suggests the presence of another canon of principles, a portion of which we do not yet know, let alone comprehend.

The value of comprehension—that which elevates it above knowing—is in its utility of application. One may academically know, for example, how a cellphone works. Without comprehension, however, that same person might be no closer to resolving a problem with one than someone who knows very little or nothing at all. Even topics of such abstraction as quantum mechanics eventually prove to possess utility upon a significant enough deepening of comprehension.

Quantum computing provides a stark illustration. While it is not yet incorporated into our day-to-day world, there appears little question that it will be soon. To reach that implementation, scientists first had to achieve comprehension of complex oddities of quantum mechanics such as entanglement or, as Einstein characterized it, “spooky action at a distance.” Furthermore, the uses to which quantum computing might eventually be put require building blocks of understanding from other subjects. In this case, these include advanced mathematics, physics, quantum mechanics, encryption, computer science, thermodynamics, and more.

Limits?

Having expounded (hopefully) enough on the principles of knowing and comprehending as I have defined them, we are left to decide whether their reach has a limit. Certain concepts seem to indicate that a limit does indeed exist. Physicists have aptly named one of them the Heisenberg Uncertainty Principle. Generally, “it states that if we know everything about where a [subatomic] particle is located (the uncertainty of position is small), we know nothing about its momentum (the uncertainty of momentum is large), and vice versa.”
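That quotation describes the extreme case. The principle's standard quantitative form is a bound, Δx · Δp ≥ ħ/2, where ħ is the reduced Planck constant. It does not describe an all-or-nothing switch. The sketch below is a minimal illustration under stated assumptions. The function names are hypothetical. The example assumes an electron confined to roughly the width of an atom, taken here as 1e-10 m. For that case it computes the smallest momentum uncertainty the bound allows, along with the corresponding velocity uncertainty.

```python
# Minimal sketch of the Heisenberg bound: delta_x * delta_p >= hbar / 2.
# Constants are CODATA values; the confinement width below is an assumed example.

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg


def min_momentum_uncertainty(delta_x: float) -> float:
    """Smallest momentum uncertainty (kg*m/s) allowed for a position uncertainty delta_x (m)."""
    if delta_x <= 0:
        raise ValueError("position uncertainty must be positive")
    return HBAR / (2 * delta_x)


def min_velocity_uncertainty(delta_x: float, mass: float = ELECTRON_MASS) -> float:
    """Corresponding minimum velocity uncertainty (m/s) for a particle of the given mass."""
    return min_momentum_uncertainty(delta_x) / mass


if __name__ == "__main__":
    dx = 1e-10  # assumed: an electron pinned down to about the width of an atom
    print(f"delta_p >= {min_momentum_uncertainty(dx):.3e} kg*m/s")
    print(f"delta_v >= {min_velocity_uncertainty(dx):.3e} m/s")
    # Halving delta_x doubles the minimum delta_p: a sharper position means a blurrier momentum.
    print(min_momentum_uncertainty(dx / 2) / min_momentum_uncertainty(dx))
```

For the assumed electron, the velocity uncertainty comes out on the order of hundreds of kilometres per second. This is why the trade-off dominates at the subatomic scale and goes unnoticed for everyday objects.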

Perhaps the opposing view is what we call causal determinism. This idea posits “that every event is necessitated by antecedent events and conditions together with the laws of nature.” Put another way, the details of every event could be determined if the examiner had sufficient information about prior events and conditions.
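Causal determinism can be made concrete with any rule whose next state depends only on the current one. The sketch below uses the logistic map, x → r·x·(1 - x). This is an assumed toy system chosen purely for illustration, and the function names are hypothetical. Run twice from the same starting value, it retraces exactly the same history, which is the determinist's claim in miniature. The second run hints at how much work the phrase "sufficient information" is doing. With r = 4, a starting value that differs by one part in ten billion eventually yields a completely different trajectory.

```python
# Toy illustration of causal determinism: the next state is fixed entirely by the
# current state and an unchanging rule (the logistic map, an assumed example system).


def logistic_step(x: float, r: float = 4.0) -> float:
    """Apply this toy world's single 'law of nature' once."""
    return r * x * (1.0 - x)


def trajectory(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Return the states x0, x1, ..., x_steps produced by repeatedly applying the rule."""
    states = [x0]
    for _ in range(steps):
        states.append(logistic_step(states[-1], r))
    return states


if __name__ == "__main__":
    run_a = trajectory(0.2, 100)
    run_b = trajectory(0.2, 100)
    # Same antecedent conditions + same law => identical history, every time.
    print("identical runs:", run_a == run_b)

    # A starting value that differs by one part in ten billion.
    run_c = trajectory(0.2 + 1e-10, 100)
    gap = max(abs(a - c) for a, c in zip(run_a, run_c))
    print(f"largest divergence over 100 steps: {gap:.3f}")
```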

Curiously, both notions are valid in a sense, because examples of each can be objectively shown in experimental analysis. Yet both remain equally deficient, at least for the moment, because each contains an absolute term (“every” or “everything”) that cannot be universally proved or disproved.

This uncertainty gives weight to the naysayers’ proposition that humans cannot “know everything.” In this case, the Heisenberg principle holds that measuring one aspect effectively prevents the precise measurement of another. We can know that a state of being exists in each instance (a particle’s location and momentum), but we cannot comprehend the precise value of both at the same time.

Conversely, causal determinism suggests that the absence of measurability seemingly imposed by the Heisenberg principle is a mere consequence of incomplete information. In fact, the de Broglie-Bohm theory (often referred to as Bohmian mechanics) proposes that the uncertainty of Heisenberg represents “only a partial description of the system,” another form of the argument that the principle represents a state of insufficient information, not an a priori limitation. One account explains it as follows:

In particular, when a particle is sent into a two-slit apparatus, the slit through which it passes and its location upon arrival on the photographic plate are completely determined by its initial position and wave function.

In other words, the ostensibly surprising results of the double-slit experiment are explained by the contextual antecedents of the emitted particle, specifically its position and wave function: a causally deterministic explanation.

The double-slit experiment illustrated. Credit: NekoJaNekoJa, Johannes Kalliauer, CC BY-SA 4.0.

Ashley Hamer describes what the double (or two) slit experiment showed, why it created a sort of existential crisis in physics, and how it led to questioning the potential of ever definitively explaining certain goings-on in quantum physics (slightly edited for clarity).

English scientist Thomas Young… set up an experiment: He cut two slits in a sheet of metal and shone light through them onto a screen… If light were indeed made of particles, the particles that hit the sheet would bounce off and those that passed through the slits would create the image of two slits on the screen, sort of like spraying paint on a stencil. But if light were a wave, it would do something very different: Once they passed through the slits, the light waves would spread out and interact with one another. Where the waves met crest-to-crest, they'd strengthen each other and leave a brighter spot on the screen. Where they met crest-to-trough, they would cancel each other out, leaving a dark spot on the screen. That would produce what's called an "interference pattern" of one very bright slit shape surrounded by "echoes" of gradually darker slit shapes on either side…

By using a special tool, you actually can send light particles through the slits one by one. But when scientists did this, something strange happened. The interference pattern still showed up… The photons seem to "know" where they would go if they were in a wave. It's as if a theater audience showed up without seat assignments, but each person still knew the exact seat to choose in order to fill the theater correctly.

As Popular Mechanics puts it, this means that "all the possible paths of these particles can interfere with each other, even though only one of the possible paths actually happens." All realities exist at once (a concept known as superposition) until the final result occurs. Weirder still, when scientists placed detectors at each slit to determine which slit each photon was passing through, the interference pattern disappeared. That suggests that the very act of observing the photons "collapses" those many realities into one.

Famous physicist Richard Feynman described these results as “a phenomenon which is impossible, absolutely impossible, to explain in any classical way, and which has in it the heart of quantum mechanics. In reality it contains the only mystery.” Feynman highlighted the quintessential paradox arising out of quantum mechanics, that the “theory implies that measurements typically fail to have outcomes of the sort the theory was created to explain.”
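Young's crest-to-crest and crest-to-trough reasoning, quoted above, has a compact textbook form. Take two slits a distance d apart and light of wavelength λ arriving at angle θ. The path difference between the two routes is d·sin θ. If we ignore the finite width of each slit (a simplifying assumption), the relative brightness is I(θ) = cos²(π·d·sin θ / λ). The sketch below evaluates that formula. The slit spacing and wavelength are assumed example values, not figures from Young's apparatus. Because it drops the single-slit envelope, the sketch shows equally bright fringes rather than the gradually dimming "echoes." It also describes only where the bands fall. It says nothing about the one-photon-at-a-time puzzle Feynman had in mind.

```python
import math

# Idealized two-slit interference (narrow slits, distant screen):
# relative intensity I(theta) = cos^2(pi * d * sin(theta) / wavelength).


def relative_intensity(theta: float, slit_spacing: float, wavelength: float) -> float:
    """Brightness at angle theta (radians), normalized so the central peak is 1."""
    path_difference = slit_spacing * math.sin(theta)
    phase = math.pi * path_difference / wavelength
    return math.cos(phase) ** 2


def dark_fringe_angle(order: int, slit_spacing: float, wavelength: float) -> float:
    """Angle where waves meet crest-to-trough: path difference = (order + 1/2) * wavelength."""
    return math.asin((order + 0.5) * wavelength / slit_spacing)


if __name__ == "__main__":
    d = 50e-6     # assumed slit spacing: 50 micrometres
    lam = 500e-9  # assumed wavelength: 500 nm (green light)
    # Central bright fringe: equal path lengths, so crests line up.
    print(relative_intensity(0.0, d, lam))
    # First dark fringe: half-wavelength path difference, so the waves cancel.
    first_dark = dark_fringe_angle(0, d, lam)
    print(f"first dark fringe at {math.degrees(first_dark):.3f} degrees,",
          f"intensity {relative_intensity(first_dark, d, lam):.2e}")
```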

Bohmian mechanics, however, possibly explains the phenomenon that mystified Feynman. Its “guiding equation” adopts a holistic approach to measurement that includes “the system upon which the experiment is performed as well as all the measuring instruments and other devices used to perform the experiment (together with all other systems with which these have significant interaction over the course of the experiment).” Nevertheless, physicist Anthony Leggett asserts that Bohmian mechanics does not in fact resolve the measurement problem, calling it “little more than a reformulation” of the current understanding. Others agree with Leggett for varying reasons.

Regardless, what this debate illustrates is the methodology by which we reach comprehension, even on the most vexing problems. Whether Bohmian mechanics resolves the seeming limitations imposed by the uncertainty principle matters less than the fact that physicists continue to chip away at the problem and have proffered solutions that perhaps need only further development to reach consensus. In this case, then, it appears that comprehension is in fact achievable, abrogating any cognizable limitation.

Another concept that twists the brain into knots is infinity. When people think of infinity, the tendency is to imagine something “endlessly big,” without limits, or larger than comprehension. How does one comprehend that which has no apparent confines, no borders to give shape to the thing we seek to describe? For that matter, how can a “thing” be a thing at all if it has no demonstrable shape, configuration, volume, beginning, or end?

From a philosophic standpoint, infinities both create and dispel paradoxes, a matter which perhaps further diminishes our odds of comprehending the idea at all. As an example:

In the 5th century BCE the Pythagorean Archytas of Tarentum gave the following argument for the spatial infinitude of the cosmos based on the contradiction that postulating a boundary to it would seem to entail. If the cosmos is bounded, then one could extend one’s hand or a stick beyond its boundary to find either empty space or matter. And this would be part of the world, which thus cannot be bounded on pain of contradiction. So the world is unbounded. Archytas identified this with the world being infinite.

For Archytas, the existence of infinity essentially dispelled the contradiction that emerges from delimiting the cosmos. Bordering the cosmos, in this view, creates some other thing outside of that border, an unidentifiable thing whose existence is itself paradoxical. In other words, if all of existence is inside of some arbitrary border, then anything outside of that border cannot be existent, but a border implies something existing on each side of it, and thus a paradox is born.

Aristotle narrowed the idea, distinguishing ‘potential infinity’ from ‘actual infinity.’ The former comprised an item with a beginning and an end, but with an end that could extend indefinitely. A sequence of natural numbers, for instance, contains a definitive beginning and end, at least until one opts to add another (it resumes having an end once one stops adding numbers). Actual infinity comprises a “completed” system whose components are unending or uncountable. Between the numbers 1 and 2, which bound the completed set, there are 1.1, 1.2, 1.3, and so on. Infinity enters because one can keep subdividing forever. Thus, still within this completed set from 1 to 2, one can add 1.11, 1.12, 1.13, 1.111, 1.112, 1.113, etc. And on, and on.

Potential infinity is one which occurs over time. As time progresses, new pieces can be added to an item, lasting for as long as time lasts, or forever. Actual infinity denotes a set moment in time. An enclosed system may be broken down ad infinitum in any one moment, but the system survives in its completed state.
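The claim that the interval from 1 to 2 can be broken down ad infinitum rests on a simple fact: between any two different numbers there is always another, such as their midpoint. The sketch below uses Python's standard `fractions` module so that no rounding creeps in. It repeatedly takes the midpoint of the gap toward 1 and checks that every new point still sits strictly inside the completed set. The function name is hypothetical. The loop stops only because we choose a number of steps; the interval itself never runs out of room.

```python
from fractions import Fraction

# Between any two distinct numbers there is always another (e.g., their midpoint),
# so a bounded, "completed" interval like [1, 2] can be subdivided without end.


def subdivide_toward(low: Fraction, high: Fraction, steps: int) -> list[Fraction]:
    """Repeatedly take the midpoint of [low, high], moving high down to it each time."""
    points = []
    for _ in range(steps):
        mid = (low + high) / 2
        points.append(mid)
        high = mid  # narrow the interval; there is still room for another point
    return points


if __name__ == "__main__":
    pts = subdivide_toward(Fraction(1), Fraction(2), 10)
    for p in pts[:4]:
        print(p, float(p))
    print("all strictly between 1 and 2:", all(1 < p < 2 for p in pts))
```

The first few points are 3/2, 5/4, 9/8, and 17/16. Each is exact, and each is closer to 1 than the last without ever reaching it.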

Aristotle illustrated this in his resolution of Zeno’s Achilles paradox. In short, the paradox suggests that despite moving more swiftly than a tortoise with a head start, Achilles can never catch it. Each time he reaches the spot where the tortoise was, it has moved a little farther ahead, so he must complete an infinite number of ever-shorter legs. An elementary analysis of the problem, of course, indicates that while such infinities may exist, they do not seem to affect the macro-result: Achilles will catch the tortoise. Thus, the infinity within the set is delimited by the timeframe of the external completed set, even though the content within the set is unending. Does your brain hurt yet?
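The resolution can be checked with a little arithmetic. Suppose, purely as an assumed example, that Achilles runs at 10 m/s, the tortoise at 1 m/s, and the tortoise starts 100 m ahead. Each catch-up leg is one tenth of the previous one (100 m, 10 m, 1 m, and so on). The infinitely many legs therefore form a geometric series with a finite sum: 100 / (1 - 1/10) = 1000/9 m, or about 111.1 m, covered in 100/9 s, or about 11.1 s. The sketch below sums the legs exactly with Python's `fractions` module and compares the running total with that closed form. The speeds and head start are illustrative values, not part of Zeno's original.

```python
from fractions import Fraction

# Zeno's Achilles paradox as a geometric series (assumed example numbers).
ACHILLES_SPEED = Fraction(10)  # m/s
TORTOISE_SPEED = Fraction(1)   # m/s
HEAD_START = Fraction(100)     # m


def distance_after_legs(n: int) -> Fraction:
    """Total distance Achilles covers in the first n 'reach where the tortoise was' legs."""
    ratio = TORTOISE_SPEED / ACHILLES_SPEED  # each leg is this fraction of the previous one
    total, leg = Fraction(0), HEAD_START
    for _ in range(n):
        total += leg
        leg *= ratio
    return total


def catch_up_point() -> Fraction:
    """Closed form of the infinite series: head_start / (1 - ratio)."""
    ratio = TORTOISE_SPEED / ACHILLES_SPEED
    return HEAD_START / (1 - ratio)


if __name__ == "__main__":
    limit = catch_up_point()
    print(f"catch-up point: {limit} m ({float(limit):.4f} m)")
    print(f"catch-up time: {float(limit / ACHILLES_SPEED):.4f} s")
    for n in (1, 2, 5, 10):
        covered = distance_after_legs(n)
        print(f"after {n:2d} legs: {float(covered):.6f} m, short by {float(limit - covered):.2e} m")
```

The shortfall shrinks by a factor of ten with every leg. Infinitely many legs therefore add up to a finite distance and a finite time, which is the "macro-result" the paradox seems to forbid.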

The analysis of infinity seems, well, infinite. So far, we have discussed only the philosophic perspective, the academic exercises. But how does the concept of infinity affect the way we do things? Or, to put it within the lexical framework of this article, how does infinity fit into some real-world application? One example with which most people are probably at least loosely familiar is fractals. Sand dunes offer one natural instance.

Source: Weighted Linear Fractals

Fractals are a “recursively created never-ending pattern” displaying self-similarity. Mathematician Benoit Mandelbrot developed this methodology to explain the seemingly chaotic representations we see in the natural world—clouds, mountains, sand dunes, and so forth. Fractal geometry also helps explain the apparent disorder in systems, such as financial, biological, or geological. Chris Byrd explains how they work.

Most fractals are generated by taking a set of inputs and applying them as inputs to some sort of equation. The output of this equation is then fed into itself again. This feedback is repeated over and over again until the desired number of iterations passes, or until the behavior of the values is determined.
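The feedback loop Byrd describes is easiest to see in the best-known fractal, the Mandelbrot set. Start from z = 0, compute z → z² + c, and feed each output back in as the next input. A point c belongs to the set if the values never travel farther than 2 from the origin. The sketch below is a minimal escape-time version. The iteration cap, the viewing window, and the coarse text rendering are arbitrary choices for illustration.

```python
# Minimal escape-time sketch of the Mandelbrot set: feed each output of
# z -> z*z + c back into the same equation until it escapes or we give up.


def escape_time(c: complex, max_iter: int = 50) -> int:
    """Number of iterations before |z| exceeds 2, or max_iter if it never does."""
    z = 0j
    for n in range(max_iter):
        z = z * z + c   # the output becomes the next input
        if abs(z) > 2:  # once |z| > 2 the values are guaranteed to run off to infinity
            return n + 1
    return max_iter     # behaviour undetermined within the budget; treat as "in the set"


def render(width: int = 60, height: int = 24, max_iter: int = 50) -> str:
    """Coarse text picture of the region -2 <= Re(c) <= 1, -1.2 <= Im(c) <= 1.2."""
    rows = []
    for j in range(height):
        im = 1.2 - 2.4 * j / (height - 1)
        row = ""
        for i in range(width):
            re = -2.0 + 3.0 * i / (width - 1)
            row += "#" if escape_time(complex(re, im), max_iter) == max_iter else "."
        rows.append(row)
    return "\n".join(rows)


if __name__ == "__main__":
    print(render())
```

Raising `max_iter` and zooming into the boundary keeps revealing smaller copies of the same shapes. That is the self-similarity Mandelbrot used to describe natural forms.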

An important element of fractals is that they remain self-similar across a wide range of scales. To see how they inform certain scientific disciplines, look at the formation of the bronchial tree.

The bronchial tree can be visualized as a fractal structure, the final form of which is generated by an iterative process akin to that described above for the Koch curve [citations omitted]. The primordial lung bud initially undergoes a bifurcation to form the right and left bronchi, and subsequent generations are formed by a repetitive bifurcation of the most distal airways. In this manner, a dichotomously branching network is produced, filling the available space.

Source: healthiack.com
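The "repetitive bifurcation" in the quoted passage can be sketched as a recursive function. Each airway splits into two daughters, and each daughter is treated exactly like its parent, only smaller. That self-similarity is what makes the structure fractal-like. In the sketch below, the length-reduction ratio (0.8) and the starting length are assumed placeholder values, not anatomical measurements, and the names are hypothetical. The point is the shape of the recursion, under which generation n holds 2^n branches.

```python
from dataclasses import dataclass, field

# Idealized dichotomous branching: every branch splits into two smaller copies of itself.
# LENGTH_RATIO and the root length are assumed placeholder values, not anatomy.
LENGTH_RATIO = 0.8


@dataclass
class Branch:
    generation: int
    length: float
    children: list["Branch"] = field(default_factory=list)


def grow(generation: int, length: float, max_generation: int) -> Branch:
    """Build the tree recursively: the same rule is applied at every scale."""
    branch = Branch(generation, length)
    if generation < max_generation:
        for _ in range(2):  # bifurcation: one parent, two daughters
            branch.children.append(grow(generation + 1, length * LENGTH_RATIO, max_generation))
    return branch


def count_by_generation(root: Branch) -> dict[int, int]:
    """Tally how many branches exist at each generation."""
    counts: dict[int, int] = {}
    stack = [root]
    while stack:
        b = stack.pop()
        counts[b.generation] = counts.get(b.generation, 0) + 1
        stack.extend(b.children)
    return counts


if __name__ == "__main__":
    tree = grow(0, 10.0, max_generation=6)
    for gen, n in sorted(count_by_generation(tree).items()):
        print(f"generation {gen}: {n} branches, each {10.0 * LENGTH_RATIO ** gen:.2f} units long")
```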

Fractal analysis of complex biologic structures like the bronchial tree enables medical researchers to create highly accurate models that illustrate their form and function. Applying the principles of fractal growth, scientists have built models of the vascular tree that reflect changes as the embryo develops. Having broken down the complexity of the lung, scientists have since turned fractal analysis toward mapping the growth and spread of lung diseases with an eye toward achieving a “better understanding of the structural alterations during the progression of COPD and help identify subjects at a high risk of severe COPD.” Understanding the conceptual infiniteness of fractals clearly has implications for crucial areas of scientific study and application.

Infinity has other applications, such as describing the breakdown of Relativity and physical theory at the center of a black hole, a point known as the singularity. The infinite value in this case may simply reflect an erroneous understanding of what is happening in this critical part of the black hole. Put differently, the presence of an infinite value describing a real place motivates a revised inquiry into certain physics concepts, as it suggests the inadequacy of our current understanding.

The Grossone method, another principle arising out of our understanding of infinity, has applications in computational mathematics and Cosmic Continuum Theory. Physicists developed renormalization to deal with infinities in particle physics, a process theoretical physicist David Tong called “arguably the single most important advance in theoretical physics in the past 50 years.”

The point here is that while it may seem incomprehensible to imagine something that is ostensibly endless, we nonetheless understand the concept to the point that it can be applied toward real-world uses. As such, it seems more of a semantic argument whether a limitation exists on our ability to grasp infinity. For, in this case, the question becomes more about what a “limitation” itself means, and thereby leads us down an infinite rabbit hole of futility.

A Knowledge Constant

Dictionaries define a constant in two ways: 1) A fixed and well-defined number or other non-changing mathematical object. The terms mathematical constant or physical constant are sometimes used to distinguish this meaning. 2) A function whose value remains unchanged (i.e., a constant function). Such a constant is commonly represented by a variable which does not depend on the main variable(s) in question.

In either case, a constant remains stable and unchanging. But there is another way to identify a constant, and the speed of light provides the quintessential example. It is not the numerical value of that speed that makes light a constant. Rather, it is that the speed of light in a vacuum is the same for every observer, regardless of how the observer or the emitter is moving. In this way light is the ultimate constant because no external observational factor influences its essential quality. Like everything else discussed herein, however, a deeper analysis leads to a less simple result. Even the speed of light, it turns out, changes depending upon the medium through which light passes: it travels more slowly through water or glass than through a vacuum.
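The distinction can be made concrete with the textbook relation v = c / n. Here c is the invariant speed of light in a vacuum, exactly 299,792,458 m/s by the definition of the metre, and n is the refractive index of the medium. The constant c itself does not change. What changes is the speed at which light makes its way through matter. The indices below are approximate, commonly quoted values for visible light, used only for illustration.

```python
# Speed of light in a medium: v = c / n.
C = 299_792_458  # m/s, exact by definition of the metre

# Approximate refractive indices for visible light (illustrative values).
REFRACTIVE_INDEX = {
    "vacuum": 1.0,
    "air": 1.0003,
    "water": 1.33,
    "crown glass": 1.52,
    "diamond": 2.42,
}


def speed_in_medium(n: float) -> float:
    """Speed of light (m/s) in a medium with refractive index n."""
    if n <= 0:
        raise ValueError("refractive index must be positive")
    return C / n


if __name__ == "__main__":
    for medium, n in REFRACTIVE_INDEX.items():
        v = speed_in_medium(n)
        print(f"{medium:12s} n = {n:<7} v = {v:,.0f} m/s ({v / C:.1%} of c)")
```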

If even the principal constant of the universe proves less simple than it appears, it is hard to imagine a more stringent constant existing in another context. Thus, applying the notion of a constant to knowledge seems to be a fruitless exercise. Without a conceptual framework comprising all of the knowledge in the universe, there appears no way to set a governor on the acquisition or comprehension of knowledge.

The logical problem is that discerning a limit on the availability of knowledge to comprehend necessarily requires knowing at least something about that which exceeds that limit (the Archytas paradox). Even when it comes to the expansion of the universe, scientists have conceptualized what such expansion means (it is potentially incorrect to say “space” is expanding into “something else”).

To impose a limitation on knowledge (a knowledge constant), then, we would still need to propose something beyond it for it to make any sense. Doing so, however, would seemingly incite “knowing,” or becoming aware of, something that exceeds the knowledge available to us. At a minimum, therefore, knowing has no limit.

Because knowing inevitably leads to comprehension, or the pursuit thereof, the capability of knowing purportedly unobtainable knowledge also erodes any potential restraint on understanding, perhaps down to none. In sum, we can never know if humans can comprehend everything because the mere act of knowing that we cannot invites a paradox that requires us to know that which we cannot.

Finally

Deciding whether there exists some limit to human comprehension is hampered by the limitations of language. Because language is a less precise representation of reality than other methodologies, such as maths, it acutely falters when applied to especially complex ideas.

In the present case, for example, we have defined comprehension as to grasp the fundamental principles behind a thing we know. To grasp, comprehend, apprehend, know, register, or whatever other words we use will inevitably mean somewhat different things to different people. That ambiguity leaves open both honest and dishonest disagreement. For that reason, this article does not attempt to assert that humans can “know everything” as such a proposition would require somehow quantifying or identifying “everything” there is to know.

Instead, the question relies on an assessment similar to the one that informs infinity. Essentially, we do not need to count every element of an infinite set in order to comprehend, explain, and apply the principle. Likewise, we need not delineate everything and assign it a value (knowable or comprehendible) to argue whether everything can be known or comprehended. In my view it can, but we can never actually confirm it. As Maarten Boudry writes,

It is quite true that we can never rule out the possibility that there are such unknown unknowns, and that some of them will forever remain unknown, because for some (unknown) reason human intelligence is not up to the task.

But the important thing to note about these unknown unknowns is that nothing can be said about them. To presume from the outset that some unknown unknowns will always remain unknown, as mysterians do, is not modesty – it’s arrogance.

A Last, Last Word

Unfortunately, we can complicate the problem one final step further. Without characterizing or quantifying everything, it might matter whether everything is, or should be, defined in some way for the question even to make sense. If, for example, parallel universes exist, but their content can never overlap, does it make sense to include the content from such an untouchable universe when determining whether everything is knowable in this one?

Similarly, if the Big Bang represents the beginning of “time” in our iterative existence, should our definition of everything include whatever comprised everything in some extant plane outside of that existence, i.e. that which came before time?

Maarten Boudry’s full piece on knowledge is available in The Conversation.

* * *

I am the executive director of the EALS Global Foundation. You can follow me at the Evidence Files Medium page for essays on law, politics, and history, follow the Evidence Files Facebook page for regular updates, or Buy Me a Coffee if you wish to support my work.

[1] This article assumes something akin to the reasonable person standard in law when referring to a “person” or “people”: a person with an ordinary degree of reason, prudence, care, foresight, or intelligence whose conduct, conclusion, or expectation in relation to a particular circumstance or fact demonstrates a rational or logical basis.
