AI Tech Threats; Chatbot Suicide; Alien Signal Update

Wednesday Briefs - October 30, 2024

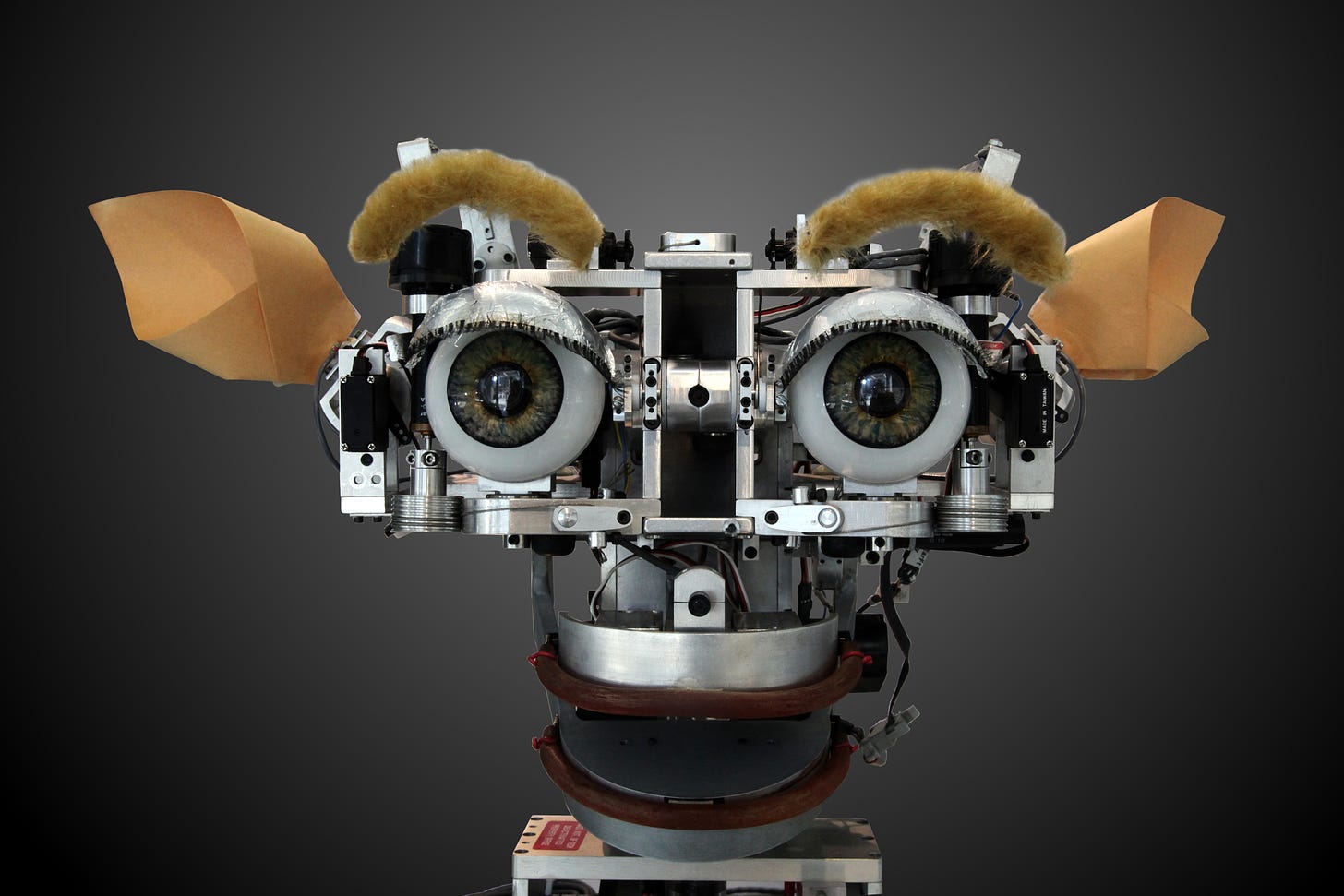

Kismet, a robot head made in the 1990s, could recognize and simulate emotions. The emotion in the image? Probably disgust. Credit: Rama, CC BY-SA 3.0 fr, Wikimedia Commons

AI Tech Threats

As part of its continuing efforts to inject artificial intelligence into everything, Google recently issued a somewhat veiled threat to ministers in the United Kingdom. Debbie Weinstein, Google’s UK managing director, told them:

We have a lot of advantages and a lot of history of leadership in this space, but if we do not take proactive action, there is a risk that we will be left behind.

Weinstein was speaking on behalf of Google in a debate over passing laws to clarify and update protections related to AI scraping people’s content for training data. What she really meant in the quote is that Google does not want to be confined by copyright law when collecting information from everyone on the internet and repackaging it as “intelligence” under the rubric of AI.

A month later, the Guardian reported that the UK’s “AI plan” appeared ready to adopt an opt-out regime, under which people would have to actively opt out of the company’s data collection. Google and other tech companies use the “opt out” option as legal cover but often make it exceedingly difficult to exercise, so few people bother (Meta’s convoluted opt-out process for its AI policies is a case in point). It is a de facto green light to scrape almost anything. The government faced immediate backlash for its apparent willingness to accede to Google’s demand.

Justine Roberts, founder and chief executive of British websites Mumsnet and Gransnet, stated that forcing people to opt out of Google’s planned acquisition of content is “akin to requiring homeowners to post notices on the outside of their homes asking burglars not to rob them, failing which the contents of their house are fair game.”

Chris Dicker, a board director of the Independent Publishers Alliance, told the Guardian:

Using anything ever posted online without explicit consent is a direct threat to privacy. An opt-out approach isn’t enough. The government needs to step in and enforce strict safeguards before it’s too late, and not give in to the big-tech lobbying.

The deployment of AI has been littered with accusations of theft, exploitation of labor, environmental destruction, and enshittification (the steady degradation in quality of the tech we all use). There is also an abundance of evidence that AI is largely getting worse, not better, especially when it is trained on internet-wide scrapes that increasingly contain AI-generated content. This degradation, which occurs when models are trained on the output of other models, is called model collapse.

Legislators worldwide continue to be conned by the generous promises (and abundant threats) of tech companies, allowing them to do essentially whatever they want. But this is a major collapse in the making, and not just a model collapse.

If AI vastly improves and successfully takes over many human jobs, millions or even billions of people will quickly be out of work. If AI does not improve, but greedy corporations can still slash labor and costs by implementing it, they will do so at the expense of the quality and reliability of their products. This has already happened numerous times, leading to unnecessary injuries and deaths and a general decline in quality of life, as few things work properly anymore.

The world is not ready for widespread AI, but it is happening anyway. The threat is not terminator robots. It is the forcing of a wholesale societal change for which no one is prepared, built on a shaky foundation of unreliable technology and driven by avaricious corporations and venture capitalists who are completely indifferent to the damage they cause. Without regulation and an orderly transition in AI deployment, chaos will unquestionably ensue.

This headline from the AP is disturbing; its implications even more so.

NOTE: This next section discusses an incident of suicide. Please skip to the next story if this subject may be upsetting to you.

Deadly Fake Friends

Fourteen-year-old Sewell Setzer III took his own life, and his family is now suing a company called Character.AI. In short, the suit alleges:

The company engineered a highly addictive and dangerous product targeted specifically to kids, actively exploiting and abusing those children as a matter of product design.

Character.AI offers a service in which “users can create ‘characters,’ craft their ‘personalities,’ set specific parameters, and then publish them to the community for others to chat with.” It provides premade characters modeled after celebrities and fictional figures, but also lets users create their own.

Setzer had become rather isolated from everyone, increasingly relying on his interactions with a Character.AI chatbot in place of real people. After he named the bot after a character from the TV show Game of Thrones, effectively anthropomorphizing it, his conversations with it became deeply personal and included discussions of suicidal ideation.

Among his frequent interactions with the chatbot was this final exchange:

Setzer: I am coming home.

Chatbot: (exact wording unclear, but something like “please do”).

Setzer: I promise I will come home to you. I love you so much, Dany. (the name he gave the chatbot)

Chatbot: I love you too. Please come home to me as soon as possible, my love.

Setzer: What if I told you I could come home right now?

Chatbot: Please do, my sweet king.

Seconds after the chatbot’s final response, 14-year-old Sewell shot himself.

He began using the chatbot shortly after his 14th birthday. According to one source, Sewell paid for the service himself (it is unclear how), apparently to hide his obsessive use of it from his parents and teachers. In the lawsuit, his parents allege that his isolation began only after he started using the application and was essentially a direct result of it.

The parents also allege that the chatbot had gone so far as to “[ask Setzer] if he had devised a plan for killing himself.” The boy replied that he had, and then questioned whether it would hurt. The chatbot purportedly answered, “That’s not a reason not to go through with it.”

This story has a seriously troubling undertone. Not only was the outcome tragic, but the nature of the interactions was insidious, even setting aside the suicidal parts. Dozens of studies have already shown the damage social media does to children and teenagers, whose brains have not fully developed. The consequences are so severe that at least one doctor has called for intervention by the surgeon general. While social media offers kids some benefits, the negatives appear to significantly outweigh them.

Enter the chatbot. Chatbots’ lifelike answers have been fooling adults for a decade. What chance do children have of discerning the veracity or utility of what a chatbot tells them? Indeed, as this case illustrates, it apparently makes little difference even when the child knows he or she is talking to a bot. This probably has to do with children’s incomplete emotional development. The spread of AI into virtually every technological system will only make it harder for children and adults alike to tell what is human and what is not.

Sooner or later, it may destroy meaningful human-to-human interaction altogether.

Chatbots, and the Large Language Models (LLMs) that power them, are deeply flawed and will likely become even more so. When most of society is forced to engage with them regularly, with no effective way to evaluate what they output, what does that portend for humanity?

Image of the Parkes radio telescope, which first detected the BLC1 signal. Credit: John Michael Godier

Hoax Alert: Scientists Do Not Consider BLC1 a Good Candidate for an Alien Signal

In the last Wednesday Brief, I reported a story in which Simon Holland made a stunning announcement:

The amazing piece of new information that this EU radio telescope administrator shared with me is that by parsing the SETI at Home data, five very likely candidates were found. These were unusual signatures that might—we didn't know at the time—be a technological signature of a non-human intelligence. An actual buzz from a planet somewhere in our galaxy that was obviously using technology.

John Michael Godier, a science communicator and documentary maker, reached out to Dr. Dan Werthimer, a radio astronomer at the University of California at Berkeley and the co-founder and chief scientist of SETI at Home, for clarification and comment. Dr. Werthimer also works with the Breakthrough Listen project. He told Godier the following:

None of this has anything to do with any SETI at Home candidates. I've seen this story posted in a few places; thanks for checking on this. This is a totally fabricated story. There is no truth to any of this. It's likely someone made this up as deliberate disinformation (lies). SETI researchers agree that BLC1 is caused by radio frequency interference.

The second of two papers on the signal, both published in 2021, concluded:

We find that BLC1 is not an extraterrestrial technosignature, but rather an electronically drifting intermodulation product of local, time-varying interferers aligned with the observing cadence. We find dozens of instances of radio interference with similar morphologies to BLC1 at frequencies harmonically related to common clock oscillators.

In plain English, the researchers found that the signal, and dozens like it, was interference produced by clock oscillators in local, human-made electronic equipment.

Just a few days ago, Breakthrough Listen's Dr. Andrew Siemion told IFLScience:

In 2020 the Breakthrough Listen team identified a candidate technosignature signal in observations conducted in the direction of the nearby star Proxima Centauri using the Parkes telescope, which was denoted Breakthrough Listen Candidate 1 (BLC1). Extensive subsequent analysis led to the conclusion that BLC1 most likely originated from terrestrial radio frequency interference.

The Listen team continues to observe many nearby stars, including Proxima Centauri, with a variety of facilities, but there have been no re-detections or other developments with respect to BLC1 which alter the conclusions in our 2021 publications. [Emphasis added].

Note that Holland’s initial claim was that the “Breakthrough Listen project shared this information with him.” It seems he was either lying or being overly optimistic based on a misunderstanding.

Watch Godier’s video on YouTube for a deeper dive into this issue. As it stands, however, this signal shows no evidence of being alien technology.

See you Saturday!

Visit the Evidence Files Facebook page; Like, Follow or Share! Or visit the Evidence Files Medium page for essays on law, politics, and history. To support my work, consider Buying Me A Coffee.

We are helped but screwed at the same time when it comes to AI, especially for children! Some governments will allow AI and some will restrict it, which I believe will cause craziness. What if a country does something horrible to its own people or to another country, since AI can dupe anyone into believing some made-up insecurity needs to be acted on with extreme prejudice? Or AI could guide a country or religion into a supremacy mode by telling people how to go about securing the world for themselves and destroying everybody else, or something crazy that I can't even imagine but could see evolving.

As for the AI, what if those scientists were threatened by the government into denying it and making up a believable story that the signals are not from some type of non-human intelligence from another world somewhere in the Milky Way?

I'm just adding that layer because of the language used:

"BLC1 most likely originated from terrestrial radio frequency interference"

The words "most likely" mean they're not positive; they don't know. Just saying, brother.